PowerShell equivalent to grep -f


PowerShell's Equivalent to grep -f for Efficient List Filtering

Learn how to replicate grep -f in PowerShell to filter content based on patterns read from a file, enhancing your scripting and data processing capabilities.

The grep -f FILE option in Unix-like systems filters lines from an input stream or file using patterns read from another file, one pattern per line. This is useful for tasks like whitelisting, blacklisting, or performing bulk lookups. PowerShell doesn't have a direct one-to-one equivalent. It does offer several robust and idiomatic ways to achieve the same result, often with greater flexibility. This article explores the most common and efficient methods to replicate grep -f in PowerShell: Select-String, Where-Object with a generated regular expression, and hash-set lookups.

Understanding grep -f

Before diving into PowerShell, let's briefly review what grep -f does. It takes a file, where each line in that file is treated as a pattern to search for in the input. If any line in the input matches any of the patterns from the pattern file, that input line is returned. This is particularly powerful when you have a large number of patterns or when the patterns themselves are dynamic.

```mermaid
flowchart TD
    A["Input File/Stream"] --> B["Read Patterns from File (patterns.txt)"]
    B --> C{"For each line in Input File"}
    C --> D{"Does line match ANY pattern?"}
    D -->|Yes| E["Output Matching Line"]
    D -->|No| F["Discard Line"]
    E --> C
    F --> C
    C -->|No more lines| G["End"]
```

Conceptual flow of grep -f operation
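The following minimal sketch makes the "match ANY pattern" step concrete by writing the flow as two nested loops. The function name Select-LinesByPatternFile is hypothetical.

- The sketch treats each pattern as a .NET regular expression.
- Matching is case-sensitive, as in grep without -i.
- .NET regex syntax differs from grep's POSIX basic regular expressions, so this approximates grep -f rather than reproducing it exactly.
- It is meant for illustration only. The methods below are faster and more idiomatic.

```powershell
# Hypothetical helper that illustrates the grep -f flow with explicit loops.
function Select-LinesByPatternFile {
    param(
        [Parameter(Mandatory)] [string] $InputPath,
        [Parameter(Mandatory)] [string] $PatternPath
    )

    # Read every pattern once; @() guarantees an array even for a single line
    $patterns = @(Get-Content -Path $PatternPath)

    foreach ($line in Get-Content -Path $InputPath) {
        foreach ($pattern in $patterns) {
            # -cmatch: case-sensitive .NET regex match (grep is case-sensitive by default)
            if ($line -cmatch $pattern) {
                $line   # emit the matching input line
                break   # one matching pattern is enough; go to the next input line
            }
        }
    }
}
```

For example, `Select-LinesByPatternFile -InputPath 'input.txt' -PatternPath 'patterns.txt'` returns banana, cherry and elderberry for the sample files created in Method 1.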

Method 1: Using Select-String with Get-Content

The most direct PowerShell equivalent for grep is Select-String. To replicate grep -f, you can read the patterns from your pattern file and pass them as an array to the -Pattern parameter of Select-String. This method is generally efficient for a moderate number of patterns.

```powershell
# Create a sample input file (the comma operator builds one array of lines)
'apple', 'banana', 'cherry', 'date', 'elderberry', 'fig' | Out-File -FilePath 'input.txt'

# Create a sample pattern file (one pattern per line)
'an', 'er' | Out-File -FilePath 'patterns.txt'

# Read the patterns and pass them all to Select-String as an array
$patterns = Get-Content -Path 'patterns.txt'
Get-Content -Path 'input.txt' | Select-String -Pattern $patterns -SimpleMatch | ForEach-Object { $_.Line }
# Output: banana, cherry, elderberry (one per line)
```

Filtering input.txt using patterns from patterns.txt with Select-String
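Select-String is case-insensitive by default, whereas grep is case-sensitive. The minimal sketch below gets closer to grep -F -f in two ways:

- -Path lets Select-String read the input file itself.
- -CaseSensitive makes it respect case.

The file names case_input.txt and case_patterns.txt are illustrative assumptions, chosen so the snippet does not overwrite the Method 1 files.

```powershell
# Small illustrative sample where case matters (file names are hypothetical)
'apple', 'banana', 'CHERRY' | Out-File -FilePath 'case_input.txt'
'an', 'er' | Out-File -FilePath 'case_patterns.txt'

$patterns = Get-Content -Path 'case_patterns.txt'

# -Path: Select-String reads the input file directly
# -SimpleMatch: literal (fixed-string) matching, like grep -F
# -CaseSensitive: exact-case matching, like grep without -i
Select-String -Path 'case_input.txt' -Pattern $patterns -SimpleMatch -CaseSensitive |
    ForEach-Object { $_.Line }
# Output: banana
```

Without -CaseSensitive, CHERRY would also be returned, because 'er' would match 'ER'.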

The -SimpleMatch parameter makes Select-String treat each pattern as a literal string rather than a regular expression, so the command above behaves like grep -F -f (fixed strings). Plain grep -f treats each line of the pattern file as a basic regular expression. If you want that behavior, omit -SimpleMatch. Select-String then uses .NET regular expression syntax rather than POSIX basic regular expressions, so some patterns need adjusting. For example, grep's \( \) grouping is written as ( ) in .NET.

Method 2: Leveraging Where-Object for More Complex Logic

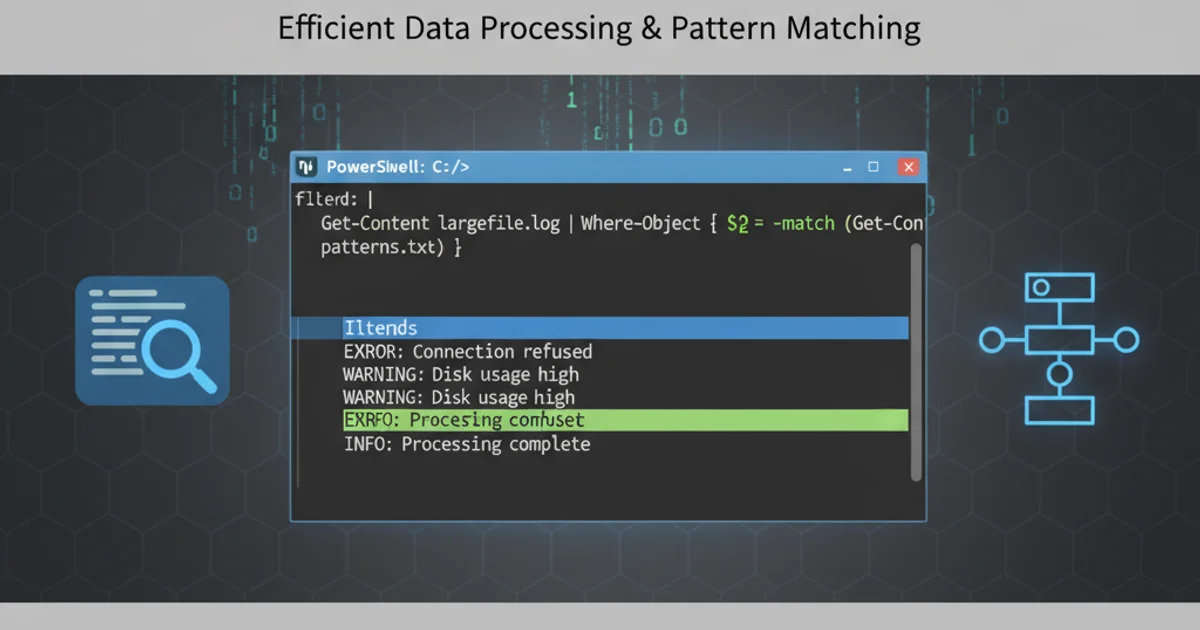

When you need more control over the matching logic, Where-Object is a flexible alternative. Examples include case-sensitive matching with -cmatch, whole-word matching with \b anchors, or combining the pattern test with other conditions in the same filter. This approach reads the patterns, combines them into a single regular expression, and then tests each input line against it.

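```powershell
# Using the same input.txt and patterns.txt from Method 1
$patterns = Get-Content -Path 'patterns.txt'

# Escape each pattern so it matches literally, then join them into one alternation: an|er
$regexPatterns = ($patterns | ForEach-Object { [regex]::Escape($_) }) -join '|'

# -match is case-insensitive; use -cmatch for case-sensitive matching
Get-Content -Path 'input.txt' | Where-Object { $_ -match $regexPatterns }
# Output: banana, cherry, elderberry (one per line)
```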

Filtering with Where-Object and dynamically built regex
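As one example of that extra control, the filter can be narrowed to whole words and exact case. This minimal sketch does three things:

- It drops blank pattern lines, because an empty alternative would make the regex match every line.
- It wraps the escaped alternation in \b word boundaries.
- It switches to -cmatch.

It assumes each pattern starts and ends with a word character, since \b behaves differently next to punctuation. The sample data and file names are illustrative.

```powershell
# Illustrative sample data (file names are hypothetical)
'the cat sat', 'concatenate', 'a Cat nap', 'dog days' | Out-File -FilePath 'words_input.txt'
'cat', 'dog' | Out-File -FilePath 'words_patterns.txt'

# Skip blank lines: an empty alternative would match every input line
$patterns = Get-Content -Path 'words_patterns.txt' | Where-Object { $_ -ne '' }

# Escape each pattern, join with |, and require a word boundary on both sides
$wholeWord = '\b(?:' + (($patterns | ForEach-Object { [regex]::Escape($_) }) -join '|') + ')\b'

# -cmatch is the case-sensitive form of -match
Get-Content -Path 'words_input.txt' | Where-Object { $_ -cmatch $wholeWord }
# Output: the cat sat, dog days (one per line)
```

'concatenate' is skipped because "cat" appears inside a longer word. 'a Cat nap' is skipped because of the case mismatch.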

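Because the patterns are joined into a single regular expression, escape each one with [regex]::Escape() as shown. Otherwise, a pattern like . in your pattern file would match any character, not just a literal dot. With escaping, this filter behaves like grep -F -f. If your pattern file contains real regular expressions, drop the [regex]::Escape() step instead.

Performance Considerations for Large Files and Many Patterns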

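For very large input files or pattern files, performance becomes a critical factor. Both of the methods above slow down as the pattern list grows:

- Select-String checks each line against the patterns one at a time, so a non-matching line is tested against every pattern.
- A single regex built from thousands of alternatives for Where-Object is costly to construct and to evaluate.

When you only need to match entire lines exactly (the equivalent of grep -F -x -f), a hash set lookup avoids this cost. Each input line needs one hash lookup, no matter how many patterns there are.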

```powershell
# Create a large sample input file (100,000 lines)
1..100000 | ForEach-Object { "Line-$_ with some content" } | Out-File -FilePath 'large_input.txt'

# Create a pattern file with the exact lines to match
'Line-1000 with some content',
'Line-50000 with some content',
'Line-99999 with some content' | Out-File -FilePath 'large_patterns.txt'

# Load the patterns into a HashSet for fast exact-match lookups
$lookupPatterns = New-Object System.Collections.Generic.HashSet[string]
# HashSet.Add returns $true/$false; [void] keeps those values out of the output
Get-Content -Path 'large_patterns.txt' | ForEach-Object { [void]$lookupPatterns.Add($_) }

# Keep only the input lines that exactly equal one of the patterns
Get-Content -Path 'large_input.txt' | Where-Object { $lookupPatterns.Contains($_) }
```

Using a HashSet for highly optimized exact line matching

The HashSet approach is ideal when you need to match entire lines exactly, like grep -F -x -f. It offers O(1) average time complexity per lookup, making it extremely fast for large datasets. However, it does not support partial matches or regular expressions. With the default comparer, the comparison is also case-sensitive.
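If the exact-line lookup should ignore case (grep -i -F -x -f), the HashSet can be created with a case-insensitive comparer. This minimal sketch passes [System.StringComparer]::OrdinalIgnoreCase to the HashSet constructor. It also uses switch -File to stream the input file, which is generally faster than a Get-Content | Where-Object pipeline. The ::new() syntax assumes PowerShell 5.0 or later, and the sample file names are illustrative.

```powershell
# Illustrative sample files (names are hypothetical)
'Alpha', 'beta', 'GAMMA', 'delta' | Out-File -FilePath 'ci_input.txt'
'alpha', 'gamma' | Out-File -FilePath 'ci_patterns.txt'

# Build the set with a case-insensitive comparer ([string[]] ensures an array)
$patterns = [string[]](Get-Content -Path 'ci_patterns.txt')
$lookup = [System.Collections.Generic.HashSet[string]]::new(
    $patterns, [System.StringComparer]::OrdinalIgnoreCase)

# switch -File reads the file line by line; $_ is the current line
$matched = switch -File 'ci_input.txt' {
    { $lookup.Contains($_) } { $_ }
}
$matched
# Output: Alpha, GAMMA (one per line)
```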