What is a mutex?

Understanding Mutexes: Essential for Concurrent Programming

Explore what a mutex is, why it's crucial for managing shared resources in multithreaded applications, and how to implement it effectively to prevent race conditions and ensure data integrity.

In the world of multithreaded programming, where multiple threads of execution run concurrently and often share resources, ensuring data integrity and preventing unpredictable behavior is paramount. This is where synchronization primitives come into play, and among the most fundamental is the mutex (short for mutual exclusion). A mutex acts like a gatekeeper, allowing only one thread at a time to access a critical section of code or a shared resource, thereby preventing race conditions and ensuring predictable program execution.

What is a Mutex and Why Do We Need It?

A mutex is a locking mechanism used to synchronize access to a shared resource. Imagine a single restroom in a busy office; only one person can use it at a time. A mutex works similarly: when a thread wants to access a shared resource (like a global variable, a file, or a database connection), it first tries to acquire the mutex. If the mutex is available, the thread acquires (locks) it and enters the critical section. If the mutex is already held by another thread, the requesting thread is blocked (typically put to sleep) until the mutex is released.

Without mutexes, multiple threads could try to modify the same shared data simultaneously, leading to a race condition. A race condition occurs when the outcome of a program depends on the unpredictable relative timing of multiple threads. This can result in corrupted data, incorrect calculations, and difficult-to-debug errors.
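The lost update described above can be replayed by hand. The sketch below is not real multithreaded code: it runs in a single thread and sequences the individual read and write steps in the order two unsynchronized threads might happen to interleave them. All function and variable names are hypothetical, chosen only for illustration.

```cpp
// Replays, in a single thread, one possible interleaving of two
// unsynchronized increments of a shared counter. Each increment is
// really a read followed by a write.
int unsynchronized_interleaving() {
    int counter = 0;        // the shared data
    int t1_read = counter;  // "thread 1" reads 0
    int t2_read = counter;  // "thread 2" reads 0 before thread 1 writes back
    counter = t1_read + 1;  // thread 1 writes 1
    counter = t2_read + 1;  // thread 2 also writes 1: one update is lost
    return counter;
}

// The ordering that mutual exclusion enforces: each read-modify-write
// finishes before the next one starts.
int serialized_interleaving() {
    int counter = 0;
    int t1_read = counter;  // "thread 1" reads 0
    counter = t1_read + 1;  // thread 1 writes 1
    int t2_read = counter;  // "thread 2" reads 1
    counter = t2_read + 1;  // thread 2 writes 2
    return counter;
}
```

Here unsynchronized_interleaving() returns 1 even though two increments ran, while serialized_interleaving() returns 2. In a real program the interleaving is chosen by the scheduler, which is why the bug appears only some of the time.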

    flowchart TD
        A[Thread 1 wants to access shared resource]
        B[Thread 2 wants to access shared resource]
        C{Mutex available?}
        D[Thread 1 acquires mutex]
        E["Thread 1 accesses shared resource (Critical Section)"]
        F[Thread 1 releases mutex]
        G[Thread 2 acquires mutex]
        H["Thread 2 accesses shared resource (Critical Section)"]
        I[Thread 2 releases mutex]
        A --> C
        B --> C
        C -- Yes --> D
        D --> E
        E --> F
        C -- "No (Mutex held by Thread 1)" --> B_Wait(Thread 2 waits)
        F --> G
        G --> H
        H --> I
        B_Wait --> G

Flowchart illustrating how a mutex controls access to a shared resource between two threads.

How Mutexes Work: Lock and Unlock Operations

The fundamental operations associated with a mutex are lock() (or acquire()) and unlock() (or release()).

- lock() / acquire(): When a thread calls lock() on a mutex, it attempts to acquire ownership. If no other thread currently owns the mutex, the calling thread successfully acquires it and proceeds. If another thread already owns the mutex, the calling thread will block (pause its execution) until the mutex is released by the owner.

- unlock() / release(): Once a thread has finished its work within the critical section, it must call unlock() to release the mutex. This makes the mutex available for other waiting threads. If there are multiple threads waiting, typically one is chosen by the operating system to acquire the mutex next.

It's crucial that every lock() operation is paired with an unlock() operation. If a thread never releases a mutex, every thread that later calls lock() on it blocks indefinitely, which is commonly described as a deadlock. Threads that instead retry a non-blocking acquisition in a loop show a related failure resembling livelock: they stay busy re-attempting an operation that keeps failing, without making progress.

    #include <iostream>
    #include <thread>
    #include <mutex>
    #include <vector>

    std::mutex mtx;       // Protects shared_data
    int shared_data = 0;  // Shared by all worker threads

    void increment_data() {
        for (int i = 0; i < 100000; ++i) {
            mtx.lock();     // Acquire the mutex
            shared_data++;  // Critical section
            mtx.unlock();   // Release the mutex
        }
    }

    int main() {
        std::vector<std::thread> threads;

        // Start 5 threads that all increment the same counter
        for (int i = 0; i < 5; ++i) {
            threads.emplace_back(increment_data);
        }

        // Wait for every thread to finish
        for (std::thread& t : threads) {
            t.join();
        }

        // With the mutex in place this is always 5 * 100000 = 500000
        std::cout << "Final shared_data: " << shared_data << std::endl;
        return 0;
    }

C++ example demonstrating mutex usage to protect a shared integer from race conditions.
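The manual lock()/unlock() pairing in the example above can also be written with the RAII wrappers from <mutex>, which release the mutex in their destructors. The following is a minimal sketch rather than part of the original example: balance_mtx, balance, withdraw, try_withdraw, and doubled_snapshot are hypothetical names introduced for illustration.

```cpp
#include <mutex>
#include <stdexcept>

std::mutex balance_mtx;  // hypothetical: protects balance
int balance = 100;       // hypothetical shared data

// std::lock_guard locks in its constructor and unlocks in its destructor,
// so the mutex is released on every exit path, including a thrown exception.
void withdraw(int amount) {
    std::lock_guard<std::mutex> guard(balance_mtx);
    if (amount > balance) {
        // Stack unwinding destroys guard, which unlocks balance_mtx
        throw std::runtime_error("insufficient funds");
    }
    balance -= amount;
}  // normal path: guard goes out of scope and unlocks here

// Wraps withdraw() and reports success or failure instead of throwing.
bool try_withdraw(int amount) {
    try {
        withdraw(amount);
        return true;
    } catch (const std::runtime_error&) {
        return false;
    }
}

// std::unique_lock additionally allows releasing the mutex early, which
// keeps slow work outside the critical section.
int doubled_snapshot() {
    std::unique_lock<std::mutex> lock(balance_mtx);
    int snapshot = balance;  // read under the lock
    lock.unlock();           // release before the computation
    return snapshot * 2;     // runs without holding the mutex
}
```

Calling try_withdraw(500) fails, and a following try_withdraw(30) still succeeds. That second call could not acquire the mutex if the exception had left it locked.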

Tip: Use std::lock_guard or std::unique_lock in C++ to ensure mutexes are released automatically, even if an exception is thrown. This greatly reduces the risk of forgetting to call unlock().

Mutex vs. Semaphore: Key Differences

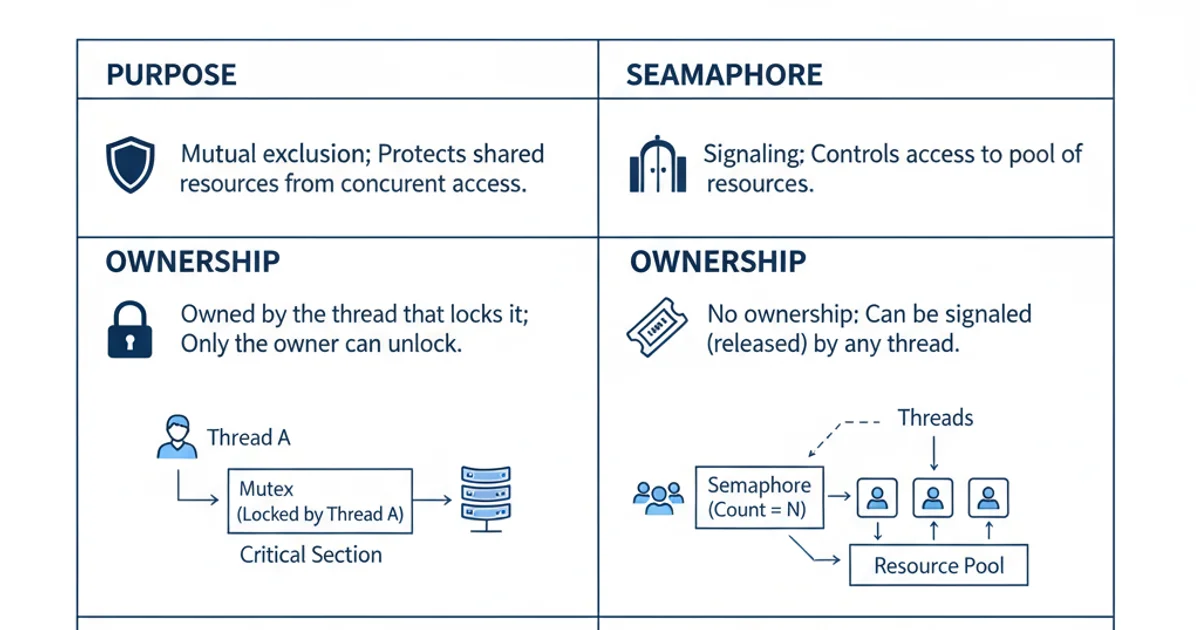

While both mutexes and semaphores are synchronization primitives, they serve slightly different purposes:

- Mutex: A mutex is primarily used for mutual exclusion. It's like a key to a single-occupancy restroom. Only one thread can hold the mutex at a time. It's typically used to protect a critical section of code or a shared resource.

- Semaphore: A semaphore is a signaling mechanism. It's like a counter for a multi-occupancy parking lot. It allows a specified number of threads (N) to access a resource concurrently. A binary semaphore (N=1) can behave like a mutex, but its primary use case is to control access to a pool of resources or to signal between threads.

The key distinction is ownership: a mutex has an owner (the thread that locked it), and only the owner may unlock it. A semaphore has no owner: any thread can signal (increment) it, and any thread can wait on (decrement) it, blocking while the count is zero.

Mutex vs. Semaphore: Understanding their distinct roles in concurrency.
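To make the ownership distinction more concrete, here is a minimal sketch of a counting semaphore built from std::mutex and std::condition_variable. The CountingSemaphore class and both helper functions are hypothetical, written only for illustration. C++20 also ships std::counting_semaphore in <semaphore>; it is deliberately not used here so that the sketch compiles as C++11.

```cpp
#include <algorithm>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical teaching class: a counting semaphore with no owning thread.
class CountingSemaphore {
public:
    explicit CountingSemaphore(int permits) : permits_(permits) {}

    // wait / decrement: blocks while no permit is available
    void acquire() {
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [this] { return permits_ > 0; });
        --permits_;
    }

    // signal / increment: any thread may call this
    void release() {
        {
            std::lock_guard<std::mutex> lock(m_);
            ++permits_;
        }
        cv_.notify_one();
    }

private:
    std::mutex m_;
    std::condition_variable cv_;
    int permits_;
};

// Runs `threads` workers against `permits` slots and returns the largest
// number of workers observed inside the guarded region at the same time.
int peak_concurrency(int threads, int permits) {
    CountingSemaphore slots(permits);
    std::mutex stats_mtx;  // a plain mutex protects the bookkeeping
    int inside = 0;
    int peak = 0;
    std::vector<std::thread> pool;
    for (int i = 0; i < threads; ++i) {
        pool.emplace_back([&] {
            slots.acquire();
            {
                std::lock_guard<std::mutex> g(stats_mtx);
                peak = std::max(peak, ++inside);
            }
            std::this_thread::sleep_for(std::chrono::milliseconds(2));
            {
                std::lock_guard<std::mutex> g(stats_mtx);
                --inside;
            }
            slots.release();
        });
    }
    for (std::thread& t : pool) t.join();
    return peak;
}

// Signaling: the producer thread releases a permit that the calling
// thread is waiting for. No thread "owns" the semaphore.
bool signal_from_other_thread() {
    CountingSemaphore ready(0);
    bool produced = false;
    std::thread producer([&] {
        produced = true;
        ready.release();  // signal from a different thread
    });
    ready.acquire();      // blocks until the producer signals
    bool seen = produced;
    producer.join();
    return seen;
}
```

With two permits, peak_concurrency(6, 2) never reports more than two workers inside the region at once, which is the parking-lot behavior described above. In signal_from_other_thread(), the release comes from a thread that never acquired anything, which a mutex would not allow.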