What is yarn-client mode in Spark?


Understanding Spark's Yarn-Client Mode for Cluster Execution

Explore Spark's Yarn-Client mode, its architecture, execution flow, and when to use it for efficient cluster resource management.

Apache Spark is a powerful unified analytics engine for large-scale data processing. When running Spark applications on a cluster, a resource manager is essential for allocating resources and coordinating tasks. Apache Hadoop YARN (Yet Another Resource Negotiator) is a popular choice for this role. On YARN, Spark supports two deploy modes, client and cluster, which differ in where the driver program runs. This article covers client mode, usually called Yarn-Client mode.

What is Yarn-Client Mode?

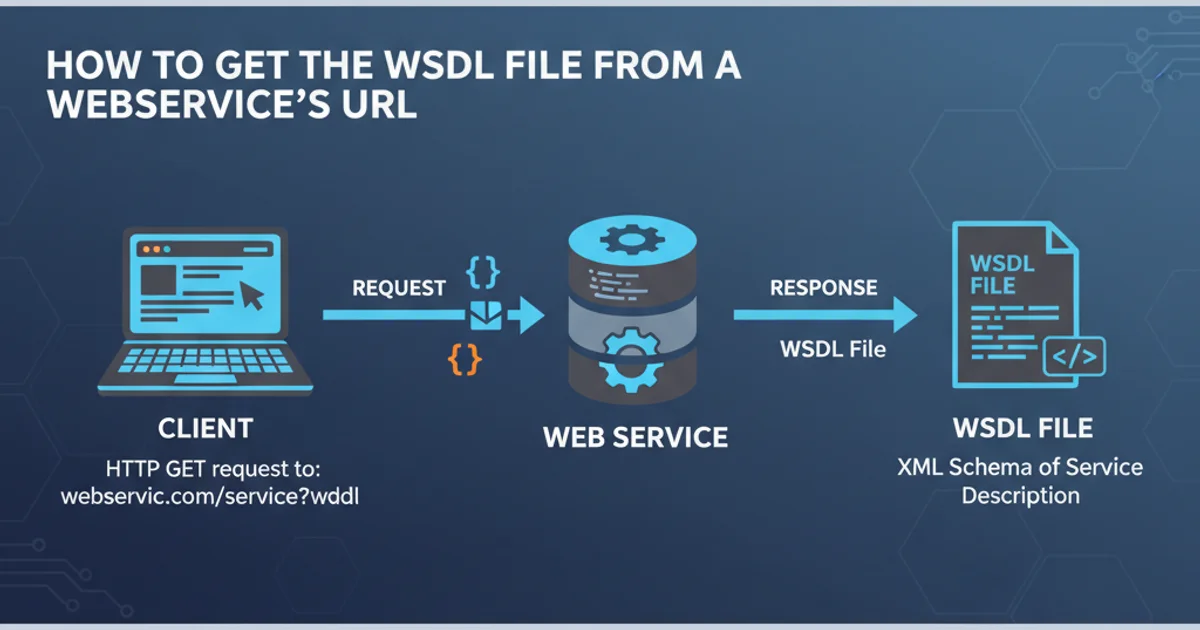

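In Yarn-Client mode, the Spark driver program runs on the machine where the `spark-submit` command is executed (the 'client' machine). The client machine therefore hosts the SparkContext and schedules the application's tasks, and it must stay connected to the cluster until the application finishes. The executors, which perform the actual data processing, run in YARN containers on the cluster's NodeManagers. YARN still starts an ApplicationMaster for the application, but in client mode its only job is to request executor containers from the ResourceManager. The name comes from the older `--master yarn-client` URL. Current Spark versions express the same thing as `--master yarn --deploy-mode client`.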
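Because the driver runs on the client machine, that machine needs the Hadoop client configuration to locate the ResourceManager. Spark's YARN documentation asks for `HADOOP_CONF_DIR` or `YARN_CONF_DIR` to point at the directory holding those files. Below is a minimal preflight check, assuming a bash wrapper script around `spark-submit`. The function name `check_yarn_client_env` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check for a yarn-client submission.
# Spark finds the YARN ResourceManager through the Hadoop client
# configuration located via HADOOP_CONF_DIR or YARN_CONF_DIR.
check_yarn_client_env() {
  local dir
  for dir in "${HADOOP_CONF_DIR:-}" "${YARN_CONF_DIR:-}"; do
    # Accept the first variable that points at an existing directory.
    if [ -n "$dir" ] && [ -d "$dir" ]; then
      echo "Using Hadoop configuration from $dir"
      return 0
    fi
  done
  echo "Set HADOOP_CONF_DIR or YARN_CONF_DIR before using --deploy-mode client" >&2
  return 1
}
```

A wrapper could then run `check_yarn_client_env && spark-submit --master yarn --deploy-mode client ...`, so a misconfigured client fails immediately instead of during the connection attempt.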

```mermaid
flowchart TD
    A[Client Machine] --> B(spark-submit command)
    B --> C{"Spark Driver (on Client)"}
    C -->|Request Resources| D[YARN ResourceManager]
    D -->|Allocate Containers| E[YARN NodeManager 1]
    D -->|Allocate Containers| F[YARN NodeManager 2]
    E --> G[Spark Executor 1]
    F --> H[Spark Executor 2]
    C -->|Send Tasks| G
    C -->|Send Tasks| H
    G -->|Heartbeats and Task Status| C
    H -->|Heartbeats and Task Status| C
```

Spark Yarn-Client Mode Architecture

Execution Flow in Yarn-Client Mode

When you submit a Spark application in Yarn-Client mode, the following sequence of events typically occurs:

- Driver Launch: The `spark-submit` script starts the Spark driver program on the client machine.

- Application Submission: The driver submits the application to the YARN ResourceManager, which starts a small ApplicationMaster in a container on one of the NodeManagers.

- Resource Request: The ApplicationMaster asks the ResourceManager for containers with the resources (CPU cores, memory) configured for the executors.

- Container Allocation: The YARN ResourceManager allocates containers on various NodeManagers in the cluster.

- Executor Launch: The ApplicationMaster has the NodeManagers launch Spark executors in the allocated containers, and each executor registers with the driver on the client machine.

- Task Distribution: Once the executors have registered, the driver distributes tasks to them for parallel processing.

- Monitoring and Coordination: The driver tracks task progress, reschedules failed tasks, and collects results. It must remain reachable by the executors and the ApplicationMaster for the lifetime of the application.

- Application Completion: When the application finishes, the driver stops, the ApplicationMaster unregisters from the ResourceManager, and YARN releases the containers.
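The resource request in the steps above is sized per executor. YARN must reserve the executor heap plus a memory overhead. The sketch below estimates that container size under several assumptions:

- Spark's documented default for `spark.executor.memoryOverhead` (10% of executor memory, at least 384 MiB).
- No off-heap or PySpark memory.
- A scheduler that rounds each request up to a multiple of `yarn.scheduler.minimum-allocation-mb`. 1024 MiB is assumed here, so check your cluster's actual setting.

The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical estimate of the YARN container size requested per executor.
# Assumptions: default overhead (10% of heap, minimum 384 MiB), no off-heap
# or PySpark memory, and requests rounded up to the scheduler's minimum allocation.
executor_container_mb() {
  local heap_mb=$1                  # --executor-memory, in MiB
  local min_alloc_mb=${2:-1024}     # yarn.scheduler.minimum-allocation-mb (assumed)
  local overhead_mb=$(( heap_mb / 10 ))   # 10% of the heap, truncated to whole MiB
  if [ "$overhead_mb" -lt 384 ]; then
    overhead_mb=384                 # documented minimum overhead
  fi
  local request_mb=$(( heap_mb + overhead_mb ))
  # Round up to the next multiple of the minimum allocation.
  echo $(( (request_mb + min_alloc_mb - 1) / min_alloc_mb * min_alloc_mb ))
}
```

Under these assumptions, `executor_container_mb 4096` prints `5120`. An executor started with `--executor-memory 4G` asks for 4505 MiB and occupies a 5 GiB container.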

Advantages and Disadvantages

Yarn-Client mode offers several benefits but also comes with certain limitations:

Advantages:

- Interactive Development: Ideal for interactive analysis and debugging, because driver output appears directly on the client console. Interactive shells such as `spark-shell` and `pyspark` can only run in client mode on YARN.

- Local Resource Access: The driver can directly access local files and resources on the client machine.

- Simpler Setup: Can be simpler to set up for initial development and testing.

Disadvantages:

- Client Machine Dependency: The client machine becomes a single point of failure. If the client machine goes down, the entire Spark application fails.

- Network Latency: The driver's communication with executors across the network can introduce latency, especially if the client machine is far from the cluster.

- Resource Consumption: The client machine needs sufficient resources (CPU, memory) to run the Spark driver. This can be substantial for large applications, for example when large results are collected back to the driver.

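- Long-Running Applications: Poorly suited to long-running or production applications, because they depend on the client machine and its network connection staying up for the whole run. Cluster mode (`--deploy-mode cluster`), which runs the driver inside the YARN ApplicationMaster, is usually preferred for these workloads.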
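A submission script might encode the trade-offs above by choosing the deploy mode from the kind of job. This is a hypothetical sketch. The helper name and the job-type labels are made up for illustration, and the mapping simply follows the advantages and disadvantages listed here.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pick a --deploy-mode value from a job-type label.
choose_deploy_mode() {
  case "$1" in
    interactive|debug|exploratory)
      echo client    # driver output lands on the local console
      ;;
    batch|streaming|production)
      echo cluster   # driver runs in the YARN ApplicationMaster, independent of the client
      ;;
    *)
      echo "unknown job type: $1" >&2
      return 1
      ;;
  esac
}
```

For example, `spark-submit --master yarn --deploy-mode "$(choose_deploy_mode batch)" ...` would submit a batch job in cluster mode.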

```bash
spark-submit \
  --class com.example.MySparkApp \
  --master yarn \
  --deploy-mode client \
  --executor-memory 4G \
  --num-executors 10 \
  my-spark-app.jar \
  arg1 arg2
```

Example spark-submit command for Yarn-Client mode
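In client mode, the driver JVM is already running on the client by the time application code executes. Spark's configuration documentation therefore notes that driver memory must be set with `--driver-memory` (or in the defaults file), not through `SparkConf` inside the application.

The sketch below wraps the example command as a bash array, adds `--driver-memory`, and supports a dry run. `submit_client_mode`, `DRY_RUN`, and the `2G` driver memory value are hypothetical choices.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the example yarn-client submission.
# Building the command as an array keeps every flag a separate argument.
# --driver-memory sizes the driver JVM on the client machine (2G is an assumed value).
# Any arguments passed to the function are forwarded to the application.
submit_client_mode() {
  local cmd=(
    spark-submit
    --class com.example.MySparkApp
    --master yarn
    --deploy-mode client
    --driver-memory 2G
    --executor-memory 4G
    --num-executors 10
    my-spark-app.jar
    "$@"
  )
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # Print the command instead of running it.
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Running `DRY_RUN=1 submit_client_mode arg1 arg2` prints the full command line without contacting the cluster, which makes it easy to review the flags before a real submission.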