Why is LRU better than FIFO?

Categories:

Why LRU Caching Outperforms FIFO for Better Performance

Explore the fundamental differences between LRU (Least Recently Used) and FIFO (First-In, First-Out) caching algorithms and understand why LRU consistently delivers superior performance in most real-world scenarios.

Caching is a critical technique used to improve the performance of systems by storing frequently accessed data in a faster, more readily available location. When the cache is full and new data needs to be stored, a cache eviction policy determines which existing data block to remove. Two common policies are FIFO (First-In, First-Out) and LRU (Least Recently Used). While both aim to manage cache space, their approaches to eviction lead to significant differences in efficiency and hit rates. This article delves into why LRU generally provides a better caching strategy than FIFO.

Understanding FIFO: Simplicity with a Flaw

The FIFO caching algorithm is perhaps the simplest to implement. It operates on the principle that the first data block to enter the cache is the first one to be evicted when space is needed. Think of it like a queue: elements are added to one end and removed from the other. This policy is straightforward because it only requires tracking the order of insertion. However, its simplicity is also its biggest drawback.

flowchart LR

subgraph Cache

A["Block A (Oldest)"] --> B["Block B"]

B --> C["Block C (Newest)"]

end

D["New Block D"] --> E{Cache Full?}

E -->|Yes| F["Evict Block A"]

E -->|No| G["Add Block D to Cache"]

F --> GFIFO Cache Eviction Process: The oldest block is always evicted.

The fundamental flaw in FIFO is its disregard for access frequency. A data block that was inserted first might still be actively used. Evicting such a block leads to a 'cache miss' shortly after, requiring the system to fetch it again from slower storage. This phenomenon is often referred to as 'Belady's Anomaly' in some contexts, where increasing cache size can paradoxically lead to more cache misses with FIFO.

Understanding LRU: Prioritizing Recency for Better Hits

LRU, or Least Recently Used, takes a more intelligent approach. Instead of evicting the oldest block, it evicts the block that has not been accessed for the longest period of time. The core assumption behind LRU is that data that has been used recently is more likely to be used again in the near future (temporal locality). This assumption holds true for a vast majority of real-world access patterns, such as file system access, web page requests, and database queries.

flowchart LR

subgraph Cache

A["Block A (Least Recently Used)"] --> B["Block B"]

B --> C["Block C (Most Recently Used)"]

end

D["New Block D"] --> E{Cache Full?}

E -->|Yes| F["Evict Block A"]

E -->|No| G["Add Block D to Cache"]

H["Access Block B"] --> I["Move Block B to MRU position"]LRU Cache Eviction Process: The least recently used block is evicted. Accessing a block updates its recency.

Implementing LRU typically involves maintaining a list or queue of cache blocks, ordered by their last access time. When a block is accessed, it's moved to the 'most recently used' end of the list. When eviction is needed, the block at the 'least recently used' end is removed. This dynamic ordering ensures that frequently accessed items remain in the cache, leading to a higher cache hit rate and better overall performance.

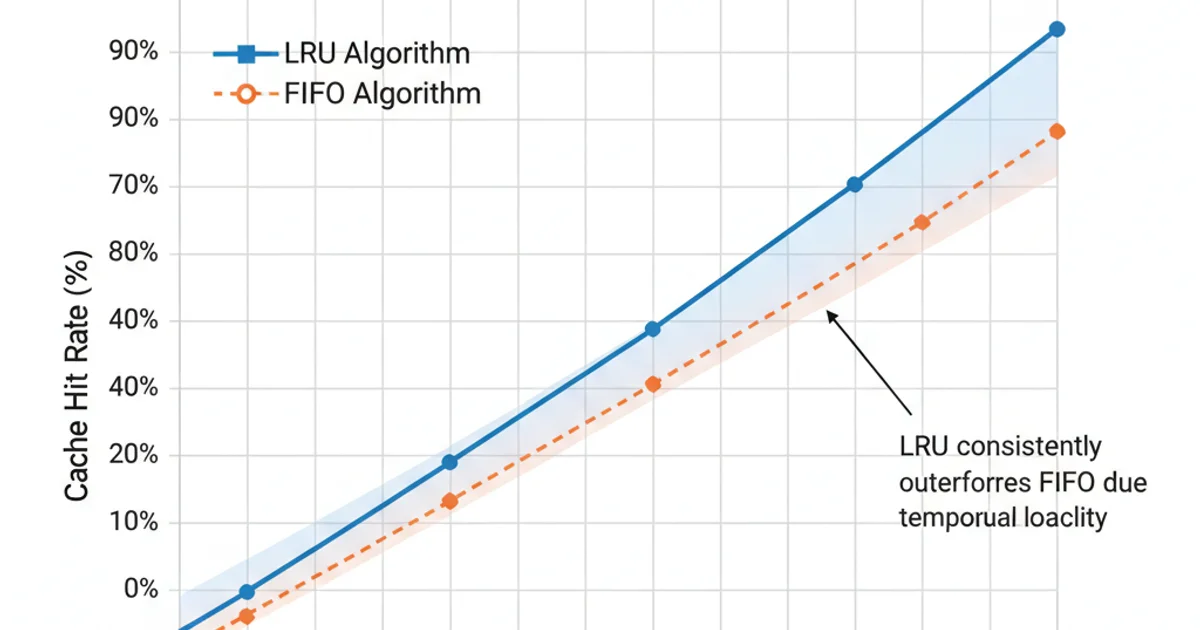

Performance Comparison: Why LRU Wins

The primary metric for evaluating a caching algorithm is its 'hit rate' – the percentage of times requested data is found in the cache. A higher hit rate means fewer expensive fetches from slower storage. Due to its intelligent eviction policy, LRU almost always achieves a higher hit rate than FIFO for the same cache size, especially with realistic access patterns.

Illustrative comparison of LRU vs. FIFO cache hit rates.

Consider a scenario where a small set of data blocks are accessed repeatedly. FIFO would eventually evict these frequently used blocks if they were among the first to enter the cache, even if they are constantly being re-accessed. LRU, on the other hand, would keep these 'hot' blocks in the cache, only evicting them if they truly fall out of recent use. This makes LRU much more resilient to fluctuating access patterns and better at retaining valuable data.

Practical Implementation Considerations

Implementing an LRU cache typically involves a combination of a hash map (for O(1) lookup of cache items) and a doubly linked list (for O(1) updates to recency). The hash map stores key-value pairs, where the value is a reference to a node in the linked list. The linked list maintains the order of recency, with the head being the most recently used and the tail being the least recently used.

from collections import OrderedDict

class LRUCache:

def __init__(self, capacity: int):

self.cache = OrderedDict()

self.capacity = capacity

def get(self, key: int) -> int:

if key not in self.cache:

return -1

self.cache.move_to_end(key) # Mark as recently used

return self.cache[key]

def put(self, key: int, value: int) -> None:

if key in self.cache:

self.cache.move_to_end(key) # Mark as recently used

self.cache[key] = value

if len(self.cache) > self.capacity:

self.cache.popitem(last=False) # Remove LRU item

# Example Usage:

lru_cache = LRUCache(2)

lru_cache.put(1, 1)

lru_cache.put(2, 2)

print(lru_cache.get(1)) # returns 1

lru_cache.put(3, 3) # evicts key 2

print(lru_cache.get(2)) # returns -1 (not found)

lru_cache.put(4, 4) # evicts key 1

print(lru_cache.get(1)) # returns -1 (not found)

print(lru_cache.get(3)) # returns 3

print(lru_cache.get(4)) # returns 4

A simple LRU cache implementation in Python using OrderedDict.