Why Spring's jdbcTemplate.batchUpdate() so slow?

Categories:

Unraveling Slow JdbcTemplate.batchUpdate() Performance in Spring

Explore common pitfalls and optimization strategies for improving the performance of JdbcTemplate.batchUpdate() in Spring applications, especially with MySQL.

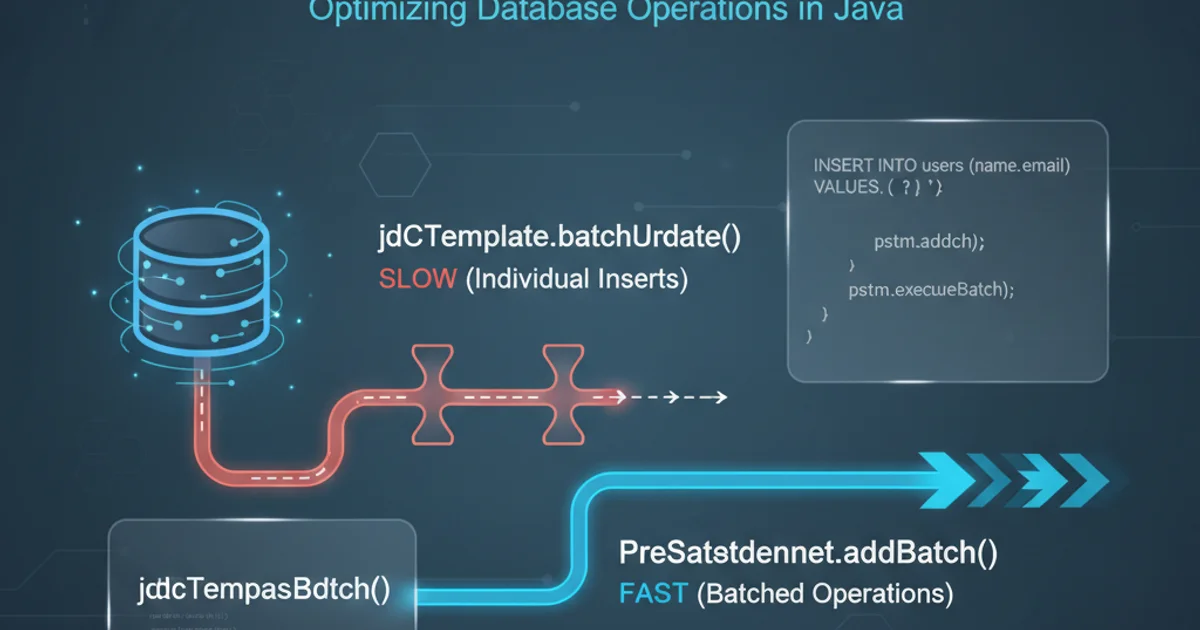

Spring's JdbcTemplate provides a powerful and convenient way to interact with relational databases. For bulk operations, batchUpdate() is often the go-to method. However, many developers encounter surprisingly slow performance when using it, particularly with large datasets or specific database configurations like MySQL. This article delves into the common reasons behind sluggish batchUpdate() performance and offers practical solutions to optimize your Spring data operations.

Understanding the batchUpdate() Mechanism

Before optimizing, it's crucial to understand how JdbcTemplate.batchUpdate() works under the hood. It leverages JDBC's addBatch() and executeBatch() methods. Ideally, this means sending multiple SQL statements to the database in a single network round trip, significantly reducing overhead compared to executing individual UPDATE or INSERT statements. However, several factors can prevent this ideal scenario from materializing, leading to poor performance.

sequenceDiagram

participant App as Spring Application

participant JDBC as JDBC Driver

participant DB as Database

App->>JDBC: jdbcTemplate.batchUpdate(sql, batchArgs)

loop For each argument set

JDBC->>JDBC: PreparedStatement.addBatch()

end

JDBC->>DB: PreparedStatement.executeBatch()

DB-->>JDBC: Batch execution results

JDBC-->>App: Return resultsTypical batchUpdate() sequence flow

Common Performance Bottlenecks

Several factors can turn an efficient batchUpdate() into a performance bottleneck. Identifying these is the first step towards resolution.

1. Auto-Commit Mode

By default, many JDBC drivers operate in auto-commit mode. This means every single SQL statement (even those within a batch) is committed immediately. This negates the performance benefits of batching, as each addBatch() call might implicitly trigger a transaction and commit, leading to frequent disk flushes and increased overhead.

2. JDBC Driver Configuration

Some JDBC drivers, especially older versions or those with default settings, might not properly support batching or might have small batch sizes configured internally. For MySQL, the rewriteBatchedStatements connection property is critical.

3. Database Indexing and Constraints

Heavy indexing, foreign key constraints, and unique constraints can significantly slow down bulk INSERT or UPDATE operations. Each modification requires the database to update indexes and validate constraints, which can be resource-intensive.

4. Network Latency

While batching aims to reduce network round trips, high network latency between your application and the database can still impact overall performance, especially if the effective batch size is small.

5. Large Object (LOB) Data

Inserting or updating large binary or text objects (BLOBs/CLOBs) within a batch can sometimes be slower due to how the JDBC driver and database handle these types.

Optimization Strategies

Here are proven strategies to improve the performance of JdbcTemplate.batchUpdate().

1. Disable Auto-Commit

This is perhaps the most crucial step. By disabling auto-commit, you allow the entire batch to be treated as a single transaction, committed only once after executeBatch() completes. Spring's transaction management usually handles this for you, but it's good to be aware of the underlying mechanism.

import org.springframework.transaction.annotation.Transactional;

import org.springframework.jdbc.core.JdbcTemplate;

import java.util.List;

public class MyRepository {

private final JdbcTemplate jdbcTemplate;

public MyRepository(JdbcTemplate jdbcTemplate) {

this.jdbcTemplate = jdbcTemplate;

}

@Transactional

public int[] batchUpdateData(String sql, List<Object[]> batchArgs) {

// Auto-commit will be disabled by Spring's transaction manager

// for the duration of this method.

return jdbcTemplate.batchUpdate(sql, batchArgs);

}

}

Using @Transactional to manage auto-commit for batch updates

2. Configure JDBC Driver for Batching (MySQL Specific)

For MySQL, ensure your JDBC connection URL includes rewriteBatchedStatements=true. This tells the driver to rewrite multiple INSERT statements into a single multi-value INSERT statement, which is significantly more efficient.

spring.datasource.url=jdbc:mysql://localhost:3306/mydb?rewriteBatchedStatements=true&useSSL=false

MySQL JDBC URL configuration for efficient batching

3. Optimize Database Schema and Operations

- Temporarily Disable Constraints/Indexes: For very large batch inserts, consider temporarily disabling foreign key checks and unique constraints, performing the batch operation, and then re-enabling them. This should be done with extreme caution and only if data integrity can be guaranteed by other means.

- Partitioning: For extremely large tables, consider database partitioning to manage data more efficiently.

- Batch Size: Experiment with the optimal batch size. Too small, and you increase network overhead. Too large, and you might hit memory limits or database transaction log limits. A common starting point is 1000-5000 records per batch.

4. Use executeBatch() Directly (Advanced)

While JdbcTemplate.batchUpdate() is convenient, for ultimate control, you can drop down to the raw JDBC PreparedStatement.addBatch() and executeBatch() methods. This allows fine-grained control over batch sizes and error handling.

import org.springframework.jdbc.core.BatchPreparedStatementSetter;

import org.springframework.jdbc.core.JdbcTemplate;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.List;

public class MyAdvancedRepository {

private final JdbcTemplate jdbcTemplate;

public MyAdvancedRepository(JdbcTemplate jdbcTemplate) {

this.jdbcTemplate = jdbcTemplate;

}

public int[] batchUpdateWithSetter(String sql, List<MyObject> data) {

return jdbcTemplate.batchUpdate(sql, new BatchPreparedStatementSetter() {

@Override

public void setValues(PreparedStatement ps, int i) throws SQLException {

MyObject obj = data.get(i);

ps.setString(1, obj.getName());

ps.setInt(2, obj.getValue());

// Set other parameters

}

@Override

public int getBatchSize() {

return data.size();

}

});

}

}

Using BatchPreparedStatementSetter for more control over batch parameters