What is the purpose of setting a key in data.table?

Categories:

Understanding the Purpose and Power of Keys in R's data.table

Explore why setting a key in data.table is crucial for performance optimization, efficient data manipulation, and streamlined operations in R.

In the realm of R programming, the data.table package stands out for its exceptional performance and concise syntax when handling large datasets. A fundamental concept within data.table that significantly contributes to its efficiency is the 'key'. While optional, setting a key can dramatically alter how data.table objects behave and perform. This article delves into the purpose of setting a key, its benefits, and how it underpins many of data.table's powerful features.

What is a Key in data.table?

A key in data.table is one or more columns that are designated as special. When you set a key, data.table internally sorts the data by these columns and stores metadata about this sorted order. This pre-sorting and indexing allow for highly optimized operations, particularly for subsetting, joining, and grouping. Think of it as creating an index on a database table – it doesn't change the data itself, but it makes finding and relating data much faster.

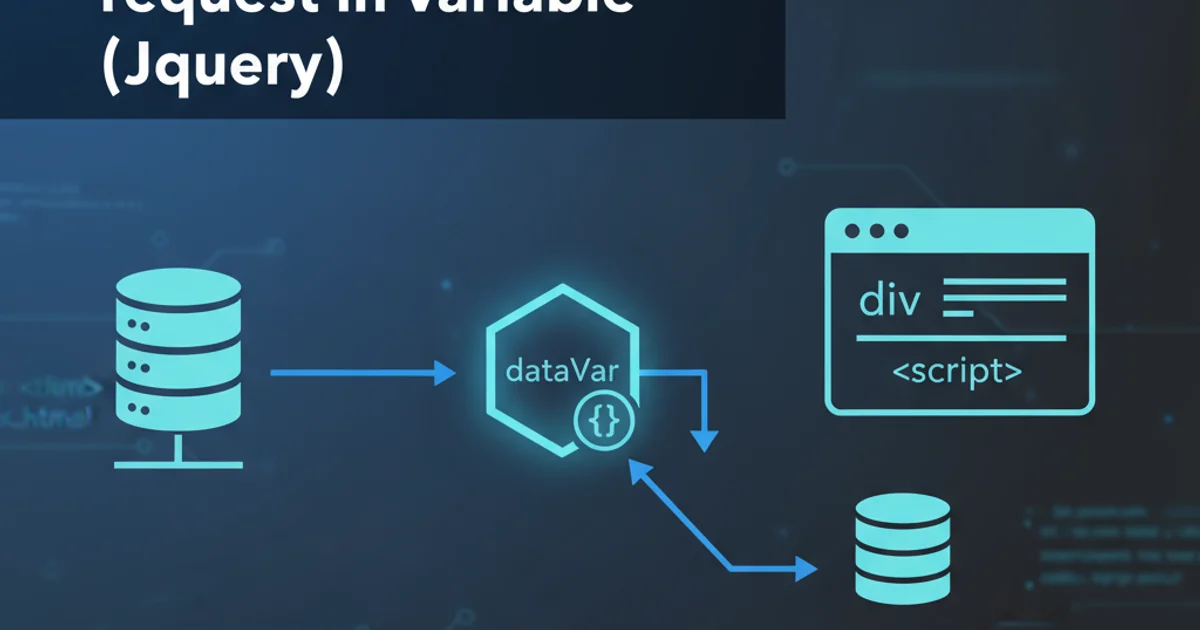

flowchart TD

A[data.table Object] --> B{Set Key (e.g., 'ID', 'Date')}

B --> C[Internal Sorting by Key Columns]

C --> D[Metadata Stored (Sorted Order)]

D --> E{Optimized Operations}

E --> F["Fast Subsetting (e.g., `DT[ID == 5]`)"]

E --> G["Efficient Joins (e.g., `DT1[DT2]`)"]

E --> H["Grouped Operations (e.g., `DT[, sum(Value), by=ID]`)"]

F & G & H --> I[Performance Boost]Conceptual flow of setting a key and its impact on data.table operations.

Key Benefits of Setting a Key

Setting a key offers several compelling advantages that are central to data.table's reputation for speed and efficiency:

1. Enhanced Performance for Subsetting and Filtering

When you subset a data.table using its key columns, data.table can leverage the pre-sorted order to quickly locate the relevant rows without scanning the entire table. This is particularly noticeable with large datasets where filtering on non-keyed columns would require a full table scan.

library(data.table)

# Create a large data.table

DT <- data.table(ID = rep(1:10000, each = 100),

Value = rnorm(1000000),

Category = sample(LETTERS, 1000000, replace = TRUE))

# --- Without a key ---

system.time(DT[ID == 5000])

# --- With a key ---

system.time(setkey(DT, ID)) # Set 'ID' as key

system.time(DT[ID == 5000]) # Much faster subsetting

Demonstrating performance difference in subsetting with and without a key.

2. Optimized Joins and Merges

One of the most powerful applications of keys is in joining (merging) data.table objects. When both data.tables involved in a join operation are keyed by the columns used for the join, data.table can perform extremely fast merges using a binary search algorithm. This avoids the need for re-sorting during the join, which is a common bottleneck in other data manipulation packages.

library(data.table)

DT1 <- data.table(ID = 1:10, Value1 = rnorm(10))

DT2 <- data.table(ID = 5:14, Value2 = runif(10))

# --- Join without keys (slower) ---

system.time(merge(DT1, DT2, by = "ID"))

# --- Join with keys (faster) ---

setkey(DT1, ID)

setkey(DT2, ID)

system.time(DT1[DT2]) # data.table's optimized join syntax

Comparing join performance with and without keys using data.table's syntax.

3. Efficient Grouped Operations

While data.table's by argument for grouped operations is already highly optimized, setting a key on the grouping columns can provide an additional performance boost. When the data is already sorted by the grouping columns, data.table can process groups sequentially without needing to re-sort or hash the grouping variables for each operation.

library(data.table)

DT <- data.table(Group = rep(1:1000, each = 1000),

Value = rnorm(1000000))

# --- Grouped operation without key ---

system.time(DT[, sum(Value), by = Group])

# --- Grouped operation with key ---

system.time(setkey(DT, Group))

system.time(DT[, sum(Value), by = Group])

Illustrating the impact of a key on grouped aggregations.

4. Uniqueness and Data Integrity

Although not its primary purpose, a key can implicitly enforce uniqueness if you set a single column as a key and then attempt to add duplicate key values. While data.table doesn't strictly prevent duplicates in a key by default (it will simply have multiple rows with the same key value), the unique() function and specific join types can leverage the key for unique operations. More importantly, when a key is set, data.table can quickly identify and work with unique combinations of key columns.

data.table can have only one key at a time. If you call setkey() again with different columns, the previous key is overwritten. If you need to perform operations optimized by different sets of columns, you might consider creating secondary indices using setindex() (available in newer data.table versions) which don't sort the data but provide similar lookup benefits.