Using a convolution kernel to blur a grayscale image


Blurring Grayscale Images with Convolution Kernels in Java

Learn how to apply a convolution kernel to blur a grayscale image using Java, covering the fundamental concepts of image processing and matrix operations.

Image blurring is a common operation in digital image processing, often used for noise reduction, artistic effects, or preparing images for further analysis. One of the most fundamental blurring techniques is convolution, which slides a small matrix of weights (a kernel or filter) across the image and replaces each pixel with a weighted sum of the pixels around it. This article walks through implementing a grayscale image blur with convolution kernels in Java.

Understanding Convolution and Kernels

Convolution is a mathematical operation that takes two functions and produces a third function that expresses how the shape of one is modified by the other. In image processing, one function is the image itself, and the other is the convolution kernel (also known as a filter matrix). The kernel is a small matrix of values that defines the effect of the convolution. For blurring, the kernel typically contains positive values that average the surrounding pixel intensities.

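When a kernel is applied to an image, it is centered over each pixel in turn. The pixel's new value is calculated by multiplying each kernel element by the image pixel it covers and then summing these products. The sum is often divided by the sum of all kernel elements to normalize the output and preserve overall brightness. (Strictly speaking, mathematical convolution flips the kernel horizontally and vertically before this multiply-and-sum step; for symmetric kernels such as the blur kernels in this article, the flipped and unflipped kernels are identical, so the distinction does not matter here.)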
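To make the multiply, sum, and normalize steps concrete, here is a minimal sketch that computes a single output pixel by hand. The class and method names (SinglePixelExample, weightedAverage) are illustrative only. The 3x3 neighborhood is taken from around the center pixel (value 130) of the 5x5 sample image used in the full example later on, and the kernel is the unnormalized all-ones version, so the division by the kernel sum (9) happens explicitly.

```java
public class SinglePixelExample {

    // Computes one output pixel: multiply each kernel weight by the pixel it
    // covers, sum the products, then divide by the sum of the kernel weights.
    // Assumes region and kernel have the same dimensions and a non-zero kernel sum.
    static double weightedAverage(int[][] region, int[][] kernel) {
        double sum = 0.0;
        int kernelSum = 0;
        for (int ky = 0; ky < kernel.length; ky++) {
            for (int kx = 0; kx < kernel[ky].length; kx++) {
                sum += region[ky][kx] * kernel[ky][kx];
                kernelSum += kernel[ky][kx];
            }
        }
        // Dividing by the kernel sum keeps overall brightness unchanged.
        return sum / kernelSum;
    }

    public static void main(String[] args) {
        // 3x3 neighborhood around the center pixel of the 5x5 sample image.
        int[][] region = {
            {115, 125, 135},
            {120, 130, 140},
            {125, 135, 145}
        };
        // Unnormalized averaging kernel: all ones, so the kernel sum is 9.
        int[][] kernel = {
            {1, 1, 1},
            {1, 1, 1},
            {1, 1, 1}
        };
        System.out.println(weightedAverage(region, kernel)); // prints 130.0
    }
}
```

The products sum to 1170, and 1170 / 9 = 130. Because the sample values change linearly across the neighborhood, their average happens to equal the center value.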

```mermaid
flowchart TD
    A[Input Grayscale Image] --> B["Select Pixel (x,y)"]
    B --> C["Center Kernel over (x,y)"]
    C --> D[Multiply Kernel elements by corresponding Image pixels]
    D --> E[Sum all products]
    E --> F["Divide by Kernel Sum (Normalization)"]
    F --> G["Assign result to Output Image pixel (x,y)"]
    G --> H{All Pixels Processed?}
    H -->|No| B
    H -->|Yes| I[Blurred Grayscale Image]
```

Convolution process for image blurring

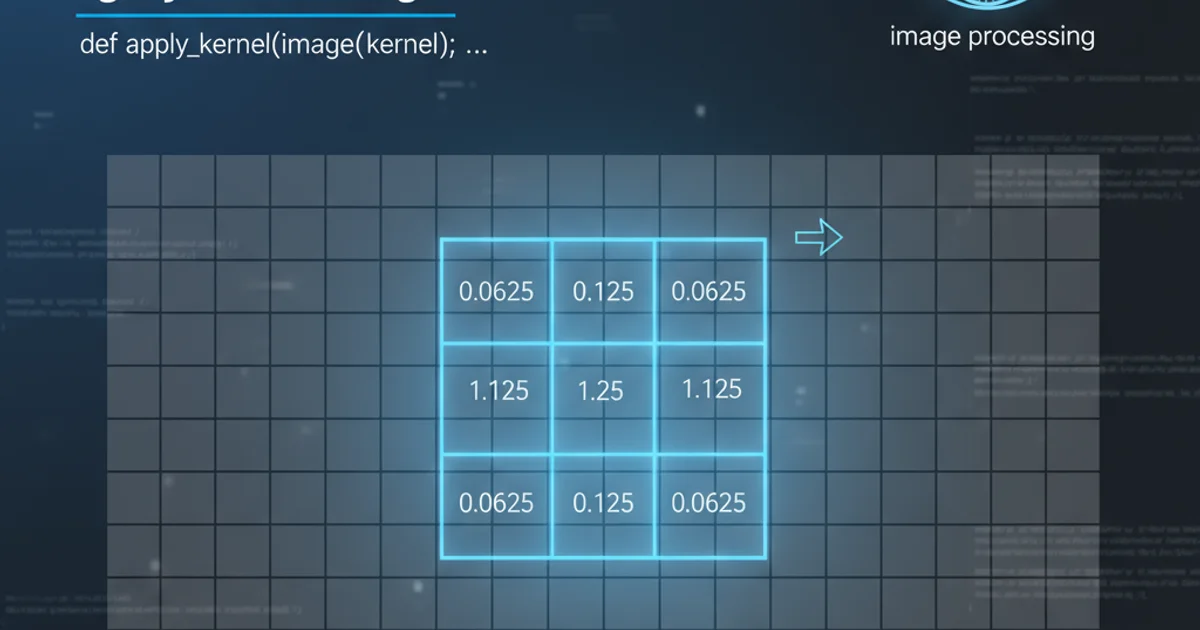

Designing a Blurring Kernel

A common blurring kernel is a simple averaging filter. For a 3x3 kernel, each element might be 1, and the sum of the kernel elements would be 9. This means each new pixel value is the average of itself and its 8 neighbors. Larger kernels (e.g., 5x5) produce a stronger blur effect. Gaussian blur kernels are also popular, using a Gaussian distribution to give more weight to the central pixel and less to those further away, resulting in a smoother blur.
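Rather than typing larger kernels out by hand, you can generate them. The following is a minimal sketch, not a definitive implementation: boxKernel and gaussianKernel are hypothetical helper names, and the Gaussian kernel is built by sampling exp(-(dx² + dy²) / (2σ²)) at each offset from the center and then normalizing, which is one common construction among several.

```java
import java.util.Arrays;

public class BlurKernels {

    // n x n averaging kernel; every weight is 1/(n*n), so the weights sum to 1.
    // Odd sizes keep the kernel centered on the pixel being computed.
    static double[][] boxKernel(int n) {
        if (n <= 0 || n % 2 == 0) {
            throw new IllegalArgumentException("size must be a positive odd number");
        }
        double[][] kernel = new double[n][n];
        for (double[] row : kernel) {
            Arrays.fill(row, 1.0 / (n * n));
        }
        return kernel;
    }

    // (2*radius+1) x (2*radius+1) Gaussian kernel: each weight is
    // exp(-(dx^2 + dy^2) / (2 * sigma^2)), then every weight is divided by the total.
    static double[][] gaussianKernel(int radius, double sigma) {
        if (radius < 0 || sigma <= 0) {
            throw new IllegalArgumentException("need radius >= 0 and sigma > 0");
        }
        int size = 2 * radius + 1;
        double[][] kernel = new double[size][size];
        double total = 0.0;
        for (int y = 0; y < size; y++) {
            for (int x = 0; x < size; x++) {
                int dx = x - radius;
                int dy = y - radius;
                kernel[y][x] = Math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma));
                total += kernel[y][x];
            }
        }
        // Normalize so the kernel preserves overall brightness.
        for (double[] row : kernel) {
            for (int x = 0; x < size; x++) {
                row[x] /= total;
            }
        }
        return kernel;
    }

    public static void main(String[] args) {
        // Print a 3x3 Gaussian kernel; the center weight is the largest.
        for (double[] row : gaussianKernel(1, 1.0)) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

A larger size, radius, or sigma spreads the weight further from the center and produces a stronger blur. Either result can be passed to applyConvolution in place of a hard-coded 3x3 kernel.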

For this example, we'll use a basic 3x3 averaging kernel:

```java
double[][] blurKernel = {
    {1.0/9.0, 1.0/9.0, 1.0/9.0},
    {1.0/9.0, 1.0/9.0, 1.0/9.0},
    {1.0/9.0, 1.0/9.0, 1.0/9.0}
};
```

A 3x3 averaging blur kernel

Implementing Convolution in Java

To implement convolution, you'll need to iterate through each pixel of the input image. For each pixel, you'll apply the kernel. Special care must be taken for pixels near the image edges, as the kernel might extend beyond the image boundaries. Common approaches include padding the image with zeros, replicating edge pixels, or simply skipping the edge pixels (which will result in a slightly smaller output image or black borders).
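These boundary strategies differ only in how an out-of-range coordinate is turned into a pixel value. The sketch below shows one possible mapping each for zero-padding, edge replication (clamping), and wrapping around to the opposite edge; the EdgeMode enum and sample method are hypothetical names introduced for illustration.

```java
public class EdgeHandling {

    // Hypothetical names for three boundary strategies.
    enum EdgeMode { ZERO, CLAMP, WRAP }

    // Returns the pixel at (x, y) of image[y][x], resolving coordinates that
    // fall outside the image according to the chosen strategy.
    static int sample(int[][] image, int x, int y, EdgeMode mode) {
        int height = image.length;
        int width = image[0].length;
        switch (mode) {
            case ZERO:
                // Treat everything outside the image as black (0).
                if (x < 0 || x >= width || y < 0 || y >= height) {
                    return 0;
                }
                return image[y][x];
            case CLAMP:
                // Replicate the nearest edge pixel.
                return image[Math.max(0, Math.min(height - 1, y))]
                            [Math.max(0, Math.min(width - 1, x))];
            case WRAP:
                // Tile the image: step off one edge, re-enter from the opposite one.
                return image[Math.floorMod(y, height)][Math.floorMod(x, width)];
            default:
                throw new IllegalArgumentException("unknown mode: " + mode);
        }
    }

    public static void main(String[] args) {
        int[][] row = {{10, 20, 30, 40}};
        for (EdgeMode mode : EdgeMode.values()) {
            // x = -1 is one pixel to the left of the image.
            System.out.println(mode + " -> " + sample(row, -1, 0, mode));
        }
    }
}
```

For x = -1 this prints 0 for ZERO, 10 for CLAMP, and 40 for WRAP. To try a different strategy in applyConvolution, the four clamping if statements could be replaced by a call such as sample(originalImage, pixelX, pixelY, mode).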

For simplicity, our example handles edge cases by clamping the kernel's access to within the image bounds, which effectively replicates the edge pixels. We'll assume the input is a grayscale image represented by a 2D array of integer pixel values (0-255), indexed as image[y][x] (row first, then column).

```java
public class ImageBlurrer {

    /**
     * Applies a kernel to a grayscale image stored as image[y][x] with values 0-255.
     * The kernel is not flipped, which is equivalent to true convolution for
     * symmetric kernels such as the averaging kernel used here.
     */
    public static int[][] applyConvolution(int[][] originalImage, double[][] kernel) {
        int width = originalImage[0].length;
        int height = originalImage.length;
        int[][] blurredImage = new int[height][width];

        int kernelWidth = kernel[0].length;
        int kernelHeight = kernel.length;
        int kernelCenterX = kernelWidth / 2;
        int kernelCenterY = kernelHeight / 2;

        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                double sum = 0.0;
                for (int ky = 0; ky < kernelHeight; ky++) {
                    for (int kx = 0; kx < kernelWidth; kx++) {
                        int pixelX = x - kernelCenterX + kx;
                        int pixelY = y - kernelCenterY + ky;

                        // Handle image boundaries (clamping)
                        if (pixelX < 0) pixelX = 0;
                        if (pixelX >= width) pixelX = width - 1;
                        if (pixelY < 0) pixelY = 0;
                        if (pixelY >= height) pixelY = height - 1;

                        sum += originalImage[pixelY][pixelX] * kernel[ky][kx];
                    }
                }
                // Ensure pixel value stays within 0-255 range
                blurredImage[y][x] = Math.min(255, Math.max(0, (int) Math.round(sum)));
            }
        }
        return blurredImage;
    }

    public static void main(String[] args) {
        // Example grayscale image (5x5 pixels)
        int[][] originalImage = {
            {100, 110, 120, 130, 140},
            {105, 115, 125, 135, 145},
            {110, 120, 130, 140, 150},
            {115, 125, 135, 145, 155},
            {120, 130, 140, 150, 160}
        };

        double[][] blurKernel = {
            {1.0/9.0, 1.0/9.0, 1.0/9.0},
            {1.0/9.0, 1.0/9.0, 1.0/9.0},
            {1.0/9.0, 1.0/9.0, 1.0/9.0}
        };

        int[][] blurredImage = applyConvolution(originalImage, blurKernel);

        System.out.println("Original Image:");
        printImage(originalImage);
        System.out.println("\nBlurred Image:");
        printImage(blurredImage);
    }

    // Prints each row of pixel values, right-aligned in 4-character columns
    private static void printImage(int[][] image) {
        for (int[] row : image) {
            for (int pixel : row) {
                System.out.printf("%4d", pixel);
            }
            System.out.println();
        }
    }
}
```
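The example above works on a plain int[][] array, while real images in Java usually live in a java.awt.image.BufferedImage. The following is one possible bridge in both directions, offered as a sketch: the class and method names (BufferedImageBridge, toGrayArray, fromGrayArray) are hypothetical, and the 0.299/0.587/0.114 luma weights (ITU-R BT.601) are an assumed, though common, choice for converting color to gray. Images that are already TYPE_BYTE_GRAY are read from their raster, because getRGB can apply a color-space conversion that alters the stored gray levels.

```java
import java.awt.image.BufferedImage;
import java.awt.image.WritableRaster;

public class BufferedImageBridge {

    // Reads an image into gray[y][x] with values 0-255.
    static int[][] toGrayArray(BufferedImage image) {
        int width = image.getWidth();
        int height = image.getHeight();
        int[][] gray = new int[height][width];
        boolean alreadyGray = image.getType() == BufferedImage.TYPE_BYTE_GRAY;
        WritableRaster raster = image.getRaster();
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                if (alreadyGray) {
                    // Use the stored sample directly, avoiding getRGB's conversion.
                    gray[y][x] = raster.getSample(x, y, 0);
                } else {
                    int rgb = image.getRGB(x, y); // packed 0xAARRGGBB
                    int r = (rgb >> 16) & 0xFF;
                    int g = (rgb >> 8) & 0xFF;
                    int b = rgb & 0xFF;
                    // Assumed BT.601 luma weights.
                    gray[y][x] = (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
                }
            }
        }
        return gray;
    }

    // Writes gray[y][x] into a new 8-bit grayscale BufferedImage.
    static BufferedImage fromGrayArray(int[][] gray) {
        int height = gray.length;
        int width = gray[0].length;
        BufferedImage out = new BufferedImage(width, height, BufferedImage.TYPE_BYTE_GRAY);
        WritableRaster raster = out.getRaster();
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Clamp so out-of-range values are not truncated by the byte raster.
                int value = Math.min(255, Math.max(0, gray[y][x]));
                raster.setSample(x, y, 0, value);
            }
        }
        return out;
    }
}
```

Combined with the code above, a pipeline might look like fromGrayArray(ImageBlurrer.applyConvolution(toGrayArray(img), blurKernel)), with javax.imageio.ImageIO.read and ImageIO.write handling file input and output.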

For real images stored in BufferedImage objects, you would extract the pixel data, convert it to grayscale if necessary, apply the convolution, and then write the modified pixel data back to a new BufferedImage.

Further Enhancements

This basic implementation provides a solid foundation. You can extend it by:

- Handling Color Images: Apply the convolution separately to each color channel (Red, Green, Blue).

- Different Kernels: Experiment with Gaussian blur kernels, sharpening kernels, edge detection kernels, etc.

- Performance Optimization: For very large images, consider using parallel processing or optimized libraries.

- Edge Handling Strategies: Implement different methods for dealing with image boundaries, such as zero-padding or wrapping.
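As an illustration of the first enhancement, here is a minimal sketch of per-channel color blurring. It assumes pixels are packed 0xRRGGBB integers in a [y][x] array (the layout returned by BufferedImage.getRGB, minus alpha), and the names ColorBlur, convolveChannel, and blurRgb are hypothetical. convolveChannel repeats the clamped-edge approach of applyConvolution so the sketch is self-contained; any alpha bits are discarded.

```java
public class ColorBlur {

    // Blurs one 0-255 channel stored as channel[y][x], clamping at the edges.
    static int[][] convolveChannel(int[][] channel, double[][] kernel) {
        int height = channel.length;
        int width = channel[0].length;
        int cy = kernel.length / 2;
        int cx = kernel[0].length / 2;
        int[][] out = new int[height][width];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                double sum = 0.0;
                for (int ky = 0; ky < kernel.length; ky++) {
                    for (int kx = 0; kx < kernel[0].length; kx++) {
                        int px = Math.max(0, Math.min(width - 1, x - cx + kx));
                        int py = Math.max(0, Math.min(height - 1, y - cy + ky));
                        sum += channel[py][px] * kernel[ky][kx];
                    }
                }
                out[y][x] = Math.min(255, Math.max(0, (int) Math.round(sum)));
            }
        }
        return out;
    }

    // Extracts the red, green, and blue channels, blurs each one
    // independently, and packs the results back into 0xRRGGBB pixels.
    static int[][] blurRgb(int[][] rgb, double[][] kernel) {
        int height = rgb.length;
        int width = rgb[0].length;
        int[][] result = new int[height][width];
        for (int shift = 16; shift >= 0; shift -= 8) { // red, then green, then blue
            int[][] channel = new int[height][width];
            for (int y = 0; y < height; y++) {
                for (int x = 0; x < width; x++) {
                    channel[y][x] = (rgb[y][x] >> shift) & 0xFF;
                }
            }
            int[][] blurred = convolveChannel(channel, kernel);
            for (int y = 0; y < height; y++) {
                for (int x = 0; x < width; x++) {
                    result[y][x] |= blurred[y][x] << shift;
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        double[][] box = {
            {1.0 / 9, 1.0 / 9, 1.0 / 9},
            {1.0 / 9, 1.0 / 9, 1.0 / 9},
            {1.0 / 9, 1.0 / 9, 1.0 / 9}
        };
        // A one-row image: red, blue, red.
        int[][] stripes = {{0xFF0000, 0x0000FF, 0xFF0000}};
        int[][] blurred = blurRgb(stripes, box);
        System.out.printf("%06X%n", blurred[0][1]); // prints AA0055
    }
}
```

In the one-row example, the middle pixel averages two red neighbors with one blue pixel (each counted three times because of vertical clamping), giving red 170 (0xAA) and blue 85 (0x55).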

Convolution is a powerful and versatile tool in image processing, forming the basis for many advanced filters and effects.