Fastest pairwise distance metric in python

Categories:

Optimizing Pairwise Distance Calculations in Python

Explore the fastest methods for computing pairwise distances between data points in Python, leveraging NumPy, SciPy, and scikit-learn for efficiency.

Calculating pairwise distances is a fundamental operation in many machine learning algorithms, data analysis tasks, and scientific computing. Whether you're working on clustering, classification, or dimensionality reduction, efficiently computing these distances can significantly impact performance. This article delves into various Python libraries and techniques to achieve the fastest possible pairwise distance calculations, focusing on Euclidean distance as a common example.

Understanding Pairwise Distance

Pairwise distance refers to the distance between every possible pair of points within a given set of data. For a dataset with N points, this results in N * (N - 1) / 2 unique distances (or N * N if including self-distances and symmetric pairs). The choice of distance metric (e.g., Euclidean, Manhattan, Cosine) depends on the nature of your data and the problem you're trying to solve. Euclidean distance is often the default due to its intuitive geometric interpretation.

flowchart TD

A[Input Data (N points, D dimensions)] --> B{Choose Distance Metric}

B --> C{Euclidean Distance}

B --> D{Manhattan Distance}

B --> E{Other Metrics}

C --> F[Calculate Distance for each pair (P1, P2)]

D --> F

E --> F

F --> G["Output: Distance Matrix (N x N)"]General workflow for pairwise distance calculation

Python Libraries for Efficiency

Python's strength in numerical computing comes from its powerful libraries. For pairwise distance calculations, NumPy, SciPy, and scikit-learn are the primary tools. Each offers optimized implementations that far surpass pure Python loops in performance. Understanding when to use each is key to maximizing efficiency.

NumPy: The Foundation

While NumPy doesn't have a direct pairwise_distance function, it provides the building blocks for highly efficient calculations. You can implement Euclidean distance using basic array operations. This approach is often surprisingly fast for smaller to medium-sized datasets, especially when memory allows for broadcasting.

import numpy as np

def numpy_pairwise_euclidean(X):

# Calculate squared Euclidean distance using broadcasting

# (a - b)^2 = a^2 - 2ab + b^2

# Sum of squares for each row

X_sq = np.sum(X**2, axis=1, keepdims=True)

# Dot product for 2ab term

dot_product = np.dot(X, X.T)

# Resulting squared distances

distances_sq = X_sq - 2 * dot_product + X_sq.T

# Ensure non-negative and take square root

distances = np.sqrt(np.maximum(distances_sq, 0))

return distances

# Example usage:

X = np.random.rand(100, 3) # 100 points, 3 dimensions

dists = numpy_pairwise_euclidean(X)

print(dists.shape)

NumPy implementation of pairwise Euclidean distance using broadcasting.

SciPy: Optimized Distance Functions

SciPy's scipy.spatial.distance module is specifically designed for distance calculations and offers a wide range of metrics. The pdist function computes distances between all pairs of observations in a given dataset, returning a condensed distance matrix. cdist computes distances between all pairs across two different datasets. For a square distance matrix, squareform can convert the condensed output of pdist.

from scipy.spatial.distance import pdist, squareform, cdist

import numpy as np

# Example data

X = np.random.rand(100, 3)

Y = np.random.rand(50, 3)

# Using pdist for distances within one set (condensed form)

condensed_dists = pdist(X, metric='euclidean')

# Convert to square matrix if needed

square_dists = squareform(condensed_dists)

print(f"pdist (condensed) shape: {condensed_dists.shape}")

print(f"squareform (square) shape: {square_dists.shape}")

# Using cdist for distances between two sets

cross_dists = cdist(X, Y, metric='euclidean')

print(f"cdist shape: {cross_dists.shape}")

Using SciPy's pdist and cdist for pairwise distance calculations.

pdist is generally the most memory-efficient option when you only need the upper triangle of the distance matrix (condensed form). If you require the full square matrix, squareform can convert it, but this will consume more memory.scikit-learn: Machine Learning Context

Scikit-learn's sklearn.metrics.pairwise module provides functions like euclidean_distances and pairwise_distances. These are often wrappers around SciPy's implementations but are integrated into the scikit-learn ecosystem, making them convenient for machine learning workflows. They can also handle sparse matrices, which is crucial for certain types of data.

from sklearn.metrics.pairwise import euclidean_distances, pairwise_distances

import numpy as np

# Example data

X = np.random.rand(100, 3)

Y = np.random.rand(50, 3)

# Using euclidean_distances (specific to Euclidean)

sklearn_euclidean_dists = euclidean_distances(X, X)

print(f"sklearn euclidean_distances shape: {sklearn_euclidean_dists.shape}")

# Using pairwise_distances (more general, can specify metric)

sklearn_general_dists = pairwise_distances(X, Y, metric='euclidean')

print(f"sklearn pairwise_distances shape: {sklearn_general_dists.shape}")

Pairwise distance calculation using scikit-learn.

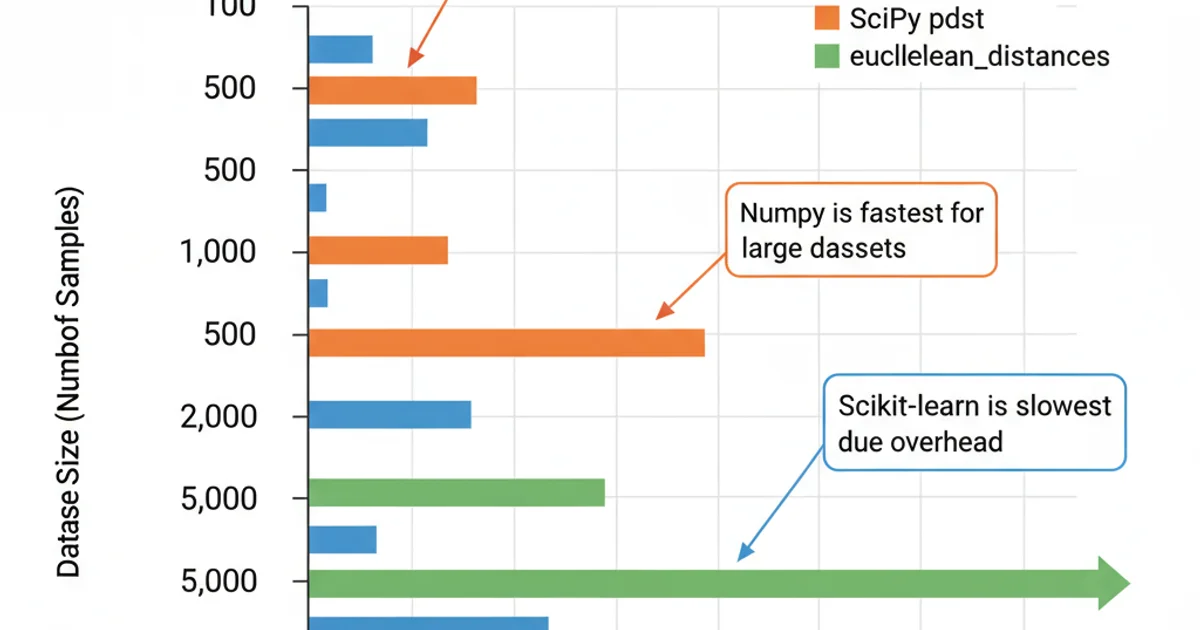

Performance Comparison and Best Practices

For dense arrays, SciPy's pdist (followed by squareform if a full matrix is needed) or cdist are typically the fastest and most robust options. Scikit-learn's euclidean_distances is also highly optimized. The pure NumPy approach can be competitive for Euclidean distance, especially if you're already comfortable with its broadcasting mechanics and want to avoid an extra dependency for simple cases.

When choosing an approach, consider:

- Dataset Size: For very large datasets, memory becomes a critical factor.

pdist's condensed output can save memory. - Metric: SciPy and scikit-learn offer a wider range of metrics out-of-the-box.

- Sparse Data: If your data is sparse, scikit-learn's

pairwise_distancesis often the best choice as it can handlescipy.sparsematrices efficiently. - Context: If you're already using scikit-learn for other tasks, its

pairwise_distancesmight be more convenient for consistency.

Conceptual performance comparison of different methods for pairwise Euclidean distance.

N points will be N x N. For N = 100,000, this is 10^10 elements. If each is a 64-bit float (8 bytes), that's 80 GB of RAM, which is often prohibitive. Consider approximate nearest neighbor methods or chunking for such cases.