Removing duplicates in lists

Categories:

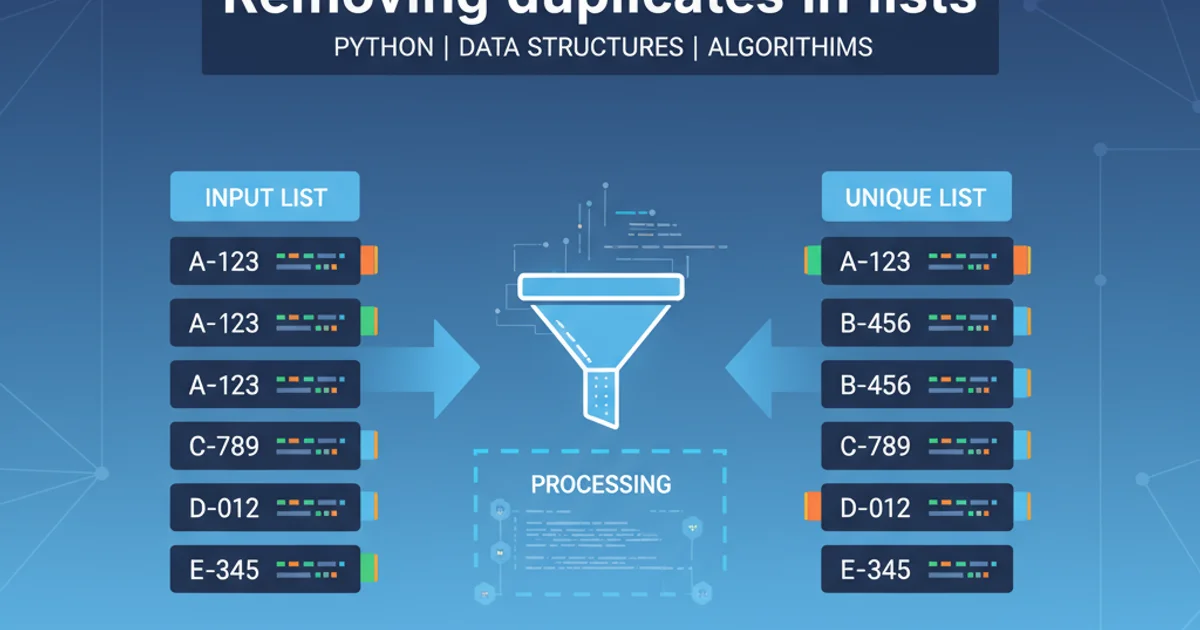

Efficiently Removing Duplicates from Python Lists

Explore various Python techniques for eliminating duplicate elements from lists, and understand their performance implications and use cases.

Removing duplicate elements from a list is a common task in programming. Whether you're cleaning data, preparing unique identifiers, or optimizing memory usage, Python offers several straightforward and efficient methods to achieve this. This article will delve into the most popular techniques, discuss their underlying mechanisms, and provide guidance on when to use each one, including performance considerations.

Understanding the Problem: Why Duplicates Matter

Duplicates can lead to incorrect calculations, skewed statistics, and inefficient processing. For instance, if you're counting unique visitors to a website, a list of IP addresses with duplicates would give an inaccurate total. Similarly, processing redundant data can waste computational resources and increase execution time. Python's flexibility allows for multiple approaches, each with its own trade-offs in terms of readability, performance, and order preservation.

```mermaid
flowchart TD
    A[Start with list] --> B{Contains duplicates?}
    B -- Yes --> C[Choose method]
    C --> D{Preserve order?}
    D -- Yes --> E["Use dict.fromkeys() (Python 3.7+)"]
    D -- Yes --> F["Use loop + new list"]
    D -- No --> G["Use set() (most efficient for uniqueness)"]
    E --> I["Result: unique list (order preserved)"]
    F --> I
    G --> H["Result: unique list (order not guaranteed)"]
    B -- No --> J[List is already unique]
    H --> K[End]
    I --> K
    J --> K
```

Decision flow for removing duplicates from a list

Method 1: Using set() (Order Not Preserved)

The most Pythonic, and usually the fastest, way to remove duplicates is to convert the list to a set and then back to a list. A set stores each element at most once, so the conversion discards duplicates automatically. Building the set takes O(n) time on average, which makes this method well suited to large lists, but sets are unordered, so the original order of elements is not preserved. All elements must also be hashable: a list that contains lists or dictionaries raises a TypeError.

```python
my_list = [1, 2, 2, 3, 4, 4, 5]
unique_list = list(set(my_list))
print(unique_list)
# Output: [1, 2, 3, 4, 5] (order may vary)
```

Removing duplicates using set()
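Two practical consequences of using a set are sketched below with illustrative data: if you want a predictable order (but not necessarily the original one), you can wrap the result in sorted(), and unhashable elements such as inner lists must first be converted to a hashable type like tuple. The variable names here are illustrative only.

```python
my_list = [3, 1, 2, 3, 1]

# sorted() gives a deterministic ascending order, not the original order.
unique_sorted = sorted(set(my_list))
print(unique_sorted)  # [1, 2, 3]

# Lists are unhashable, so they cannot be stored in a set directly.
nested = [[1, 2], [1, 2], [3]]
try:
    set(nested)
except TypeError as exc:
    print(f"TypeError: {exc}")

# Converting inner lists to tuples (which are hashable) works around this.
unique_nested = [list(t) for t in set(map(tuple, nested))]
print(sorted(unique_nested))  # [[1, 2], [3]]
```

The tuple round-trip only works when the inner elements are themselves hashable.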

Method 2: Using a Loop and a New List (Order Preserved)

If preserving the original order of elements is crucial, you can iterate through the list and append each element to a new list only if it is not already there. This approach is explicit and guarantees order preservation, but it scales poorly: each in check scans the new list linearly, so the whole loop takes O(n²) time in the worst case. On the plus side, it only needs elements to support equality comparison, so it also works for unhashable items such as nested lists.

```python
my_list = [1, 2, 2, 3, 4, 4, 5]
unique_list = []

for item in my_list:
    # Append only the first occurrence of each value
    if item not in unique_list:
        unique_list.append(item)

print(unique_list)
# Output: [1, 2, 3, 4, 5]
```

Removing duplicates while preserving order using a loop
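A common refinement of the loop, sketched below, keeps a separate set of already-seen items so that each membership check takes constant time on average, while the output list still records elements in their original order. This brings the loop down to O(n) average time. The function name unique_in_order is hypothetical, and unlike the plain loop, this version requires hashable elements.

```python
def unique_in_order(items):
    """Return a new list with duplicates removed, keeping first occurrences."""
    seen = set()   # items already added; O(1) average membership checks
    result = []    # output list that preserves the original order
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


print(unique_in_order([1, 2, 2, 3, 4, 4, 5]))  # [1, 2, 3, 4, 5]
print(unique_in_order(["b", "a", "b", "c"]))   # ['b', 'a', 'c']
```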

Method 3: Using dict.fromkeys() (Order Preserved, Python 3.7+)

For Python 3.7 and later, dict.fromkeys() offers a concise and efficient way to remove duplicates while preserving order. Since Python 3.7, dictionaries are guaranteed to maintain insertion order. Calling dict.fromkeys() with a list builds a dictionary whose keys are the list's elements, with every value set to None. Because dictionary keys are unique, later duplicates are discarded and each key keeps the position of its first occurrence. Converting the dictionary back to a list yields the keys in that order. Like set(), this requires hashable elements and runs in O(n) average time.

```python
my_list = [1, 2, 2, 3, 4, 4, 5]
unique_list = list(dict.fromkeys(my_list))
print(unique_list)
# Output: [1, 2, 3, 4, 5]
```

Removing duplicates using dict.fromkeys()
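To see what dict.fromkeys() builds under the hood, the sketch below prints the intermediate dictionary for a small, made-up list of strings: every value defaults to None, and each key sits at the position of its first occurrence.

```python
words = ["pear", "apple", "pear", "fig", "apple"]

# Each distinct element becomes a key; the values default to None.
as_dict = dict.fromkeys(words)
print(as_dict)  # {'pear': None, 'apple': None, 'fig': None}

# Iterating a dict yields its keys in insertion order (Python 3.7+).
unique_words = list(as_dict)
print(unique_words)  # ['pear', 'apple', 'fig']
```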

Performance Considerations

The choice of method depends on the size of your list and whether order must be preserved. For large lists, the set() conversion is typically the fastest, because hash-based membership checks take constant time on average, making the whole conversion O(n). When order must be preserved, dict.fromkeys() (Python 3.7+) is also O(n) on average and offers a good balance of performance and readability. The loop-based approach, while clear, slows down sharply as lists grow, because each membership test with the in operator is a linear search over the new list, giving O(n²) time overall.
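A rough way to check these claims on your own data is to time each approach with the standard-library timeit module. The sketch below uses a made-up list of 2,000 random integers drawn from 500 distinct values; the function names are illustrative, and absolute timings depend on your machine and Python version, so none are shown here.

```python
import random
import timeit

random.seed(0)
data = [random.randrange(500) for _ in range(2_000)]  # illustrative test data


def with_set(items):
    return list(set(items))


def with_dict(items):
    return list(dict.fromkeys(items))


def with_loop(items):
    result = []
    for item in items:
        if item not in result:  # linear scan of result on every iteration
            result.append(item)
    return result


for func in (with_set, with_dict, with_loop):
    seconds = timeit.timeit(lambda: func(data), number=10)
    print(f"{func.__name__:>10}: {seconds:.4f} s for 10 runs")
```

Increasing the list length or the number of distinct values should widen the gap between the loop and the two hash-based methods.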

1. Identify Duplicates

First, determine if your list contains duplicate elements. This can be done visually for small lists or programmatically by comparing the length of the list to the length of its set conversion.

2. Choose a Method

Decide whether preserving the original order of elements is critical. If not, use the set() conversion. If order is important and you're using Python 3.7+, dict.fromkeys() is a strong choice. Otherwise, a loop with a new list or a collections.OrderedDict (for older Python versions) can be used.

3. Implement and Test

Apply the chosen method to your list and test the output to ensure all duplicates are removed and the desired order (or lack thereof) is achieved. Consider edge cases like empty lists or lists with all identical elements.
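The three steps above can be sketched as a small helper plus a few edge-case checks. The names has_duplicates and dedupe and the preserve_order flag are hypothetical; the OrderedDict branch only matters on Python versions older than 3.7, where plain dicts do not guarantee order. collections.Counter is used here to report which values repeat.

```python
import sys
from collections import Counter, OrderedDict


def has_duplicates(items):
    """Step 1: a set drops duplicates, so a shorter set means repeats exist."""
    return len(items) != len(set(items))


def dedupe(items, preserve_order=True):
    """Step 2: pick a method based on whether order matters."""
    if not preserve_order:
        return list(set(items))
    if sys.version_info >= (3, 7):
        return list(dict.fromkeys(items))
    return list(OrderedDict.fromkeys(items))  # ordered fallback for old Pythons


# Step 3: test the result, including edge cases.
data = [1, 2, 2, 3, 4, 4, 5]
print(has_duplicates(data))                                # True
print({k: n for k, n in Counter(data).items() if n > 1})  # {2: 2, 4: 2}
print(dedupe(data))                                        # [1, 2, 3, 4, 5]

assert dedupe([]) == []          # empty list stays empty
assert dedupe([7, 7, 7]) == [7]  # all-identical list collapses to one item
assert sorted(dedupe(data, preserve_order=False)) == [1, 2, 3, 4, 5]
assert not has_duplicates(dedupe(data))
```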