How to pass data.frame for UPDATE with R DBI

Categories:

Efficiently Updating Databases with R: Passing DataFrames for DBI UPDATE Operations

Learn how to effectively use R's DBI package to perform UPDATE operations on a database, leveraging data from R data.frames. This guide covers common challenges and best practices for robust data manipulation.

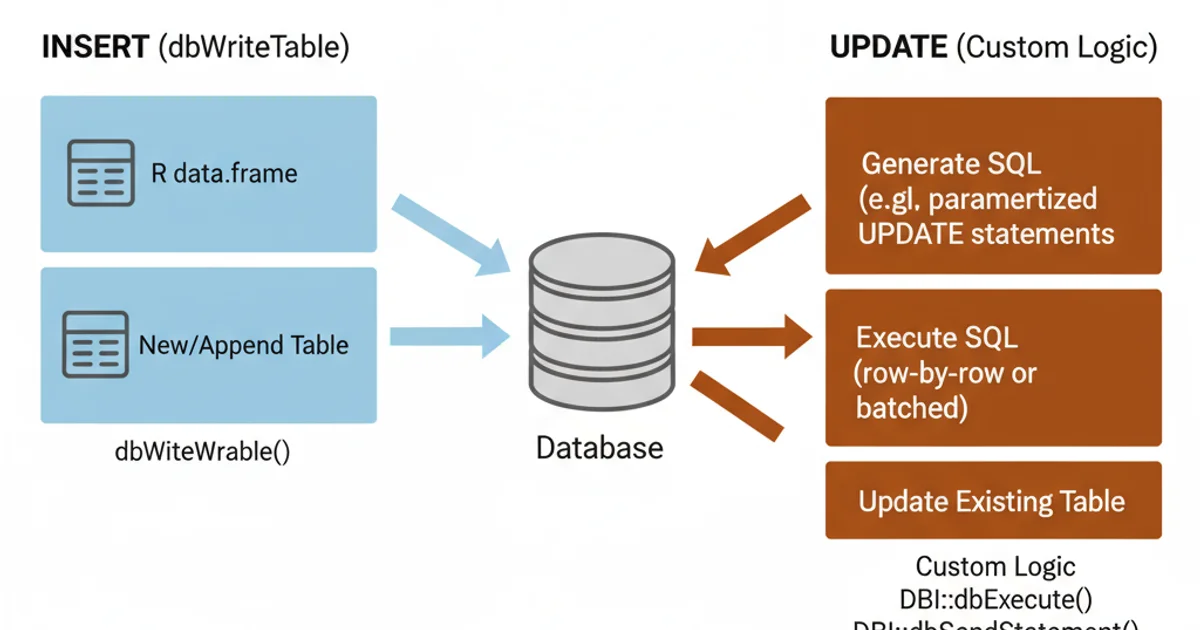

Updating records in a database is a common task in data management. When working with R, the DBI package provides a consistent interface for interacting with various database systems. While inserting data from a data.frame is straightforward with dbWriteTable(), performing UPDATE operations using data.frame content requires a more nuanced approach. This article will guide you through the process, addressing how to construct and execute UPDATE statements dynamically based on your R data.frame.

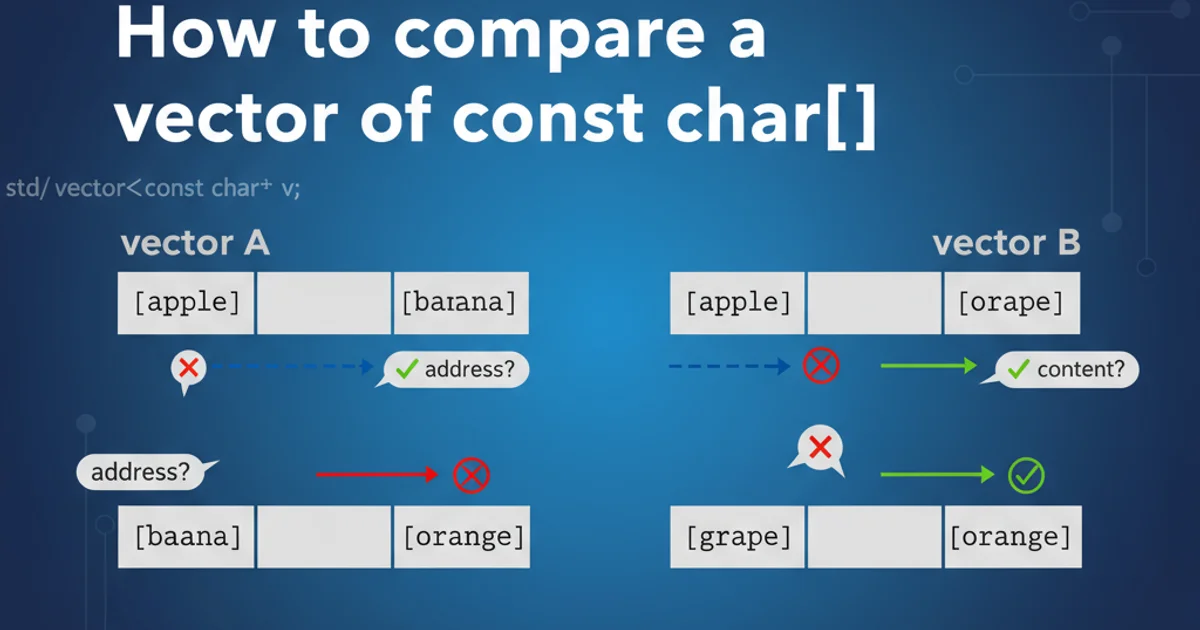

Understanding the Challenge: dbWriteTable() vs. UPDATE

The DBI package excels at bulk data operations, particularly for inserting new records. The dbWriteTable() function is designed to create new tables or append rows to existing ones. However, there isn't a direct dbUpdateTable() equivalent that takes a data.frame and automatically generates UPDATE statements based on primary keys or specific conditions. This is because UPDATE operations are inherently more complex, requiring explicit specification of which columns to update and under what conditions (the WHERE clause).

Comparison of dbWriteTable() for inserts and custom logic for UPDATE operations.

Strategy 1: Iterating and Executing Individual UPDATE Statements

One common approach is to iterate through the rows of your data.frame, constructing and executing an UPDATE statement for each row. This method offers fine-grained control over each update, allowing for complex WHERE clauses. However, it can be inefficient for very large data.frames due to the overhead of multiple database round trips.

library(DBI)

library(RSQLite)

# 1. Establish a database connection (example with SQLite)

con <- dbConnect(RSQLite::SQLite(), ":memory:")

# 2. Create a sample table and insert some initial data

dbExecute(con, "CREATE TABLE my_table (id INTEGER PRIMARY KEY, name TEXT, value REAL)")

dbExecute(con, "INSERT INTO my_table (id, name, value) VALUES (1, 'Alpha', 10.5)")

dbExecute(con, "INSERT INTO my_table (id, name, value) VALUES (2, 'Beta', 20.0)")

dbExecute(con, "INSERT INTO my_table (id, name, value) VALUES (3, 'Gamma', 30.2)")

# 3. Create a data.frame with updated values

update_df <- data.frame(

id = c(1, 3),

name = c('Alpha_Updated', 'Gamma_New'),

value = c(12.3, 35.7)

)

# 4. Iterate and update

for (i in 1:nrow(update_df)) {

row_data <- update_df[i, ]

sql_query <- sprintf(

"UPDATE my_table SET name = %s, value = %s WHERE id = %s",

dbQuoteString(con, row_data$name),

dbQuoteLiteral(con, row_data$value),

dbQuoteLiteral(con, row_data$id)

)

message(paste("Executing:", sql_query))

dbExecute(con, sql_query)

}

# 5. Verify the updates

dbGetQuery(con, "SELECT * FROM my_table")

# 6. Disconnect

dbDisconnect(con)

Iterating through a data.frame to generate and execute individual UPDATE statements.

dbQuoteString() for character values and dbQuoteLiteral() for numeric/boolean values when constructing SQL queries dynamically. This prevents SQL injection vulnerabilities and handles special characters correctly.Strategy 2: Batch UPDATE with Temporary Tables (Advanced)

For very large data.frames, executing individual UPDATE statements can be prohibitively slow. A more performant approach involves using a temporary table. The general workflow is:

- Write your

data.frameto a temporary table in the database. - Execute a single

UPDATEstatement that joins your main table with the temporary table, updating records based on matching keys. - Drop the temporary table.

library(DBI)

library(RSQLite)

# 1. Establish a database connection

con <- dbConnect(RSQLite::SQLite(), ":memory:")

# 2. Create a sample table and insert some initial data

dbExecute(con, "CREATE TABLE my_table (id INTEGER PRIMARY KEY, name TEXT, value REAL)")

dbExecute(con, "INSERT INTO my_table (id, name, value) VALUES (1, 'Alpha', 10.5)")

dbExecute(con, "INSERT INTO my_table (id, name, value) VALUES (2, 'Beta', 20.0)")

dbExecute(con, "INSERT INTO my_table (id, name, value) VALUES (3, 'Gamma', 30.2)")

# 3. Create a data.frame with updated values

update_df <- data.frame(

id = c(1, 3),

name = c('Alpha_Batch', 'Gamma_Batch'),

value = c(15.0, 38.0)

)

# 4. Write the update_df to a temporary table

temp_table_name <- "temp_updates"

dbWriteTable(con, temp_table_name, update_df, overwrite = TRUE, temporary = TRUE)

# 5. Construct and execute a single batch UPDATE statement

# Note: SQL syntax for JOIN-UPDATE varies slightly by database (e.g., MySQL, PostgreSQL, SQL Server)

# This example uses a common SQLite/PostgreSQL-like syntax.

sql_batch_update <- sprintf(

"UPDATE my_table

SET name = (SELECT T.name FROM %s AS T WHERE T.id = my_table.id),

value = (SELECT T.value FROM %s AS T WHERE T.id = my_table.id)

WHERE id IN (SELECT id FROM %s)",

dbQuoteIdentifier(con, temp_table_name),

dbQuoteIdentifier(con, temp_table_name),

dbQuoteIdentifier(con, temp_table_name)

)

message(paste("Executing batch update:\n", sql_batch_update))

dbExecute(con, sql_batch_update)

# 6. Verify the updates

dbGetQuery(con, "SELECT * FROM my_table")

# 7. Disconnect (temporary table is automatically dropped)

dbDisconnect(con)

Using a temporary table for efficient batch UPDATE operations.

Handling NULL Values and Data Types

When updating, pay close attention to NULL values and data type conversions. R's NA values typically map to SQL NULL. Ensure that the columns in your data.frame match the expected data types in your database table to avoid errors or unexpected behavior. dbQuoteLiteral() and dbQuoteString() help manage this by correctly formatting values for SQL.

RODBC users, the approach is similar but uses sqlQuery() or odbcUpdate() (if available and suitable for your specific needs). However, DBI is generally recommended for its modern interface and broader database support.1. Step 1: Establish Connection

Use dbConnect() to establish a connection to your database. Ensure you have the appropriate DBI backend package installed (e.g., RSQLite, RPostgres, RMySQL).

2. Step 2: Prepare Data

Create an R data.frame containing the id (or primary key) of the records to be updated, along with the new values for the columns you wish to change.

3. Step 3: Choose Update Strategy

Decide between iterating with individual UPDATE statements (simpler, good for small updates) or using a temporary table for batch updates (more complex, better for large datasets).

4. Step 4: Construct and Execute SQL

Dynamically build your UPDATE SQL queries using sprintf() and protect your values with dbQuoteString() or dbQuoteLiteral() to prevent SQL injection. Execute with dbExecute().

5. Step 5: Verify and Disconnect

After the update, run a SELECT query to verify the changes. Always remember to close your database connection using dbDisconnect().