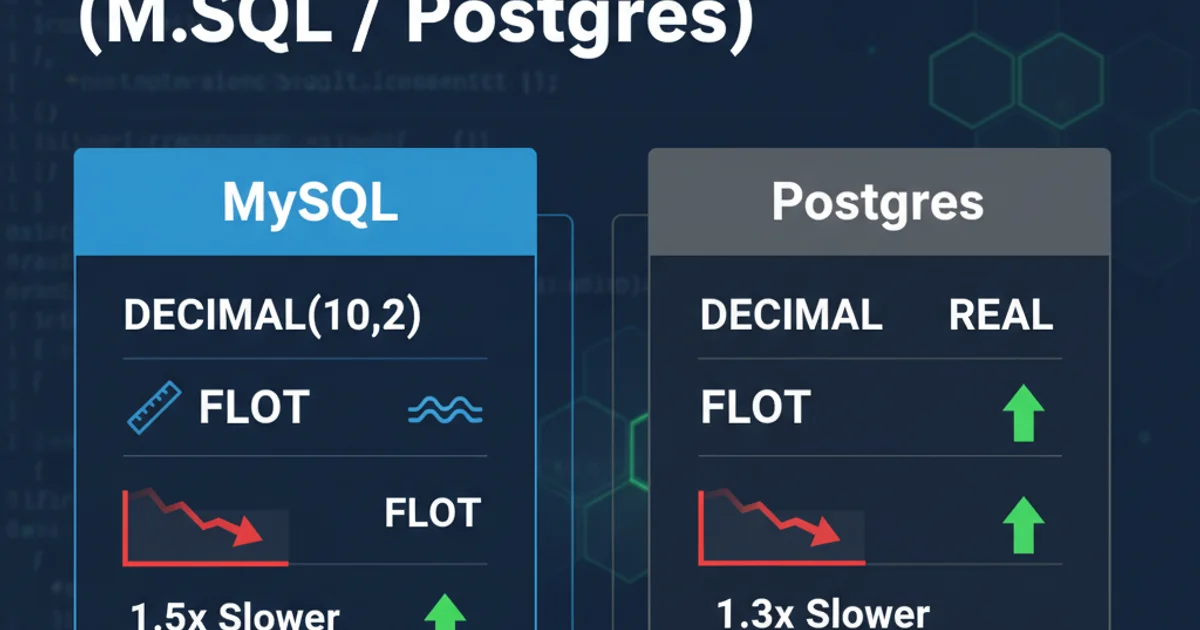

Is there a performance hit using decimal data types (MySQL / Postgres)

Decimal Data Types: Performance Impact in MySQL and PostgreSQL

Explore the performance implications of using DECIMAL data types in MySQL and PostgreSQL, understanding when and why they might introduce overhead compared to floating-point types.

When designing database schemas, choosing the correct data type for numerical values is crucial. For financial data, precise measurements, or any scenario where exact arithmetic is paramount, the DECIMAL (or NUMERIC) data type is often recommended. However, a common concern among developers is whether using DECIMAL introduces a significant performance overhead compared to approximate numeric types like FLOAT or DOUBLE. This article delves into the performance characteristics of DECIMAL in both MySQL and PostgreSQL, providing insights into its internal handling and practical implications.

Understanding DECIMAL Data Types

DECIMAL data types store exact numeric values. Unlike floating-point numbers (which store approximations), DECIMAL values are stored as a string or binary representation that preserves every digit's precision. This is essential for applications where rounding errors are unacceptable, such as monetary calculations. Both MySQL and PostgreSQL implement DECIMAL similarly, though their internal storage mechanisms and optimization strategies may differ slightly.

flowchart TD

A[Data Type Choice] --> B{Exact Precision Needed?}

B -- Yes --> C[Use DECIMAL/NUMERIC]

B -- No --> D{Approximate Precision OK?}

D -- Yes --> E[Use FLOAT/DOUBLE]

D -- No --> F[Integer Types]

C --> G[Performance Considerations]

E --> G

F --> GDecision flow for choosing numeric data types

Internal Representation and Performance Overhead

The primary reason for potential performance differences lies in how DECIMAL values are stored and processed. Floating-point numbers are typically stored in a fixed-size binary format (e.g., 4 or 8 bytes) that CPUs can process very quickly using dedicated floating-point units (FPUs). DECIMAL values, on the other hand, are often stored as a variable-length sequence of digits or in a packed binary format, requiring more complex software-based arithmetic operations.

In MySQL, DECIMAL values are stored as a binary string, where each group of nine decimal digits is packed into four bytes. Operations on these values involve converting them to an internal representation, performing calculations, and then converting them back. This process is inherently slower than direct hardware-level floating-point arithmetic.

PostgreSQL also stores NUMERIC (its equivalent of DECIMAL) in a variable-length format, often as an array of 16-bit 'digits' (base 10,000). This allows for arbitrary precision but similarly requires software-based arithmetic, which is more CPU-intensive than hardware-accelerated floating-point operations.

DECIMAL and FLOAT/DOUBLE is usually more pronounced in CPU-bound operations (heavy calculations) rather than I/O-bound operations (simple storage and retrieval).Practical Performance Implications

While DECIMAL operations are generally slower, the actual performance impact in a real-world application depends on several factors:

- Workload Type: For applications with infrequent numerical calculations or where calculations are performed on small datasets, the overhead of

DECIMALmight be negligible. - Number of Operations: If your application performs a very high volume of complex arithmetic operations on

DECIMALcolumns, the cumulative overhead can become noticeable. - Precision Requirements: The higher the precision and scale defined for a

DECIMALcolumn (e.g.,DECIMAL(30,15)vs.DECIMAL(5,2)), the more storage it consumes and the more complex the arithmetic operations become, potentially leading to greater performance differences. - Indexing: Indexing

DECIMALcolumns works similarly to other data types. The performance of index lookups is generally not significantly impacted by theDECIMALtype itself, but rather by the size and distribution of the data. - Storage:

DECIMALtypes can consume more storage space than fixed-sizeFLOAT/DOUBLEtypes, especially for high precision, which can indirectly affect I/O performance for very large tables.

-- MySQL Example: Creating tables with DECIMAL and FLOAT

CREATE TABLE products_decimal (

id INT PRIMARY KEY AUTO_INCREMENT,

price DECIMAL(10, 2),

quantity INT,

total_value DECIMAL(12, 2)

);

CREATE TABLE products_float (

id INT PRIMARY KEY AUTO_INCREMENT,

price FLOAT,

quantity INT,

total_value FLOAT

);

SQL schema examples for DECIMAL and FLOAT in MySQL

-- PostgreSQL Example: Creating tables with NUMERIC and REAL

CREATE TABLE products_numeric (

id SERIAL PRIMARY KEY,

price NUMERIC(10, 2),

quantity INT,

total_value NUMERIC(12, 2)

);

CREATE TABLE products_real (

id SERIAL PRIMARY KEY,

price REAL,

quantity INT,

total_value REAL

);

SQL schema examples for NUMERIC (DECIMAL) and REAL (FLOAT) in PostgreSQL

FLOAT or DOUBLE for monetary values or any data where exact precision is non-negotiable. The potential for rounding errors can lead to serious financial discrepancies.Benchmarking Considerations

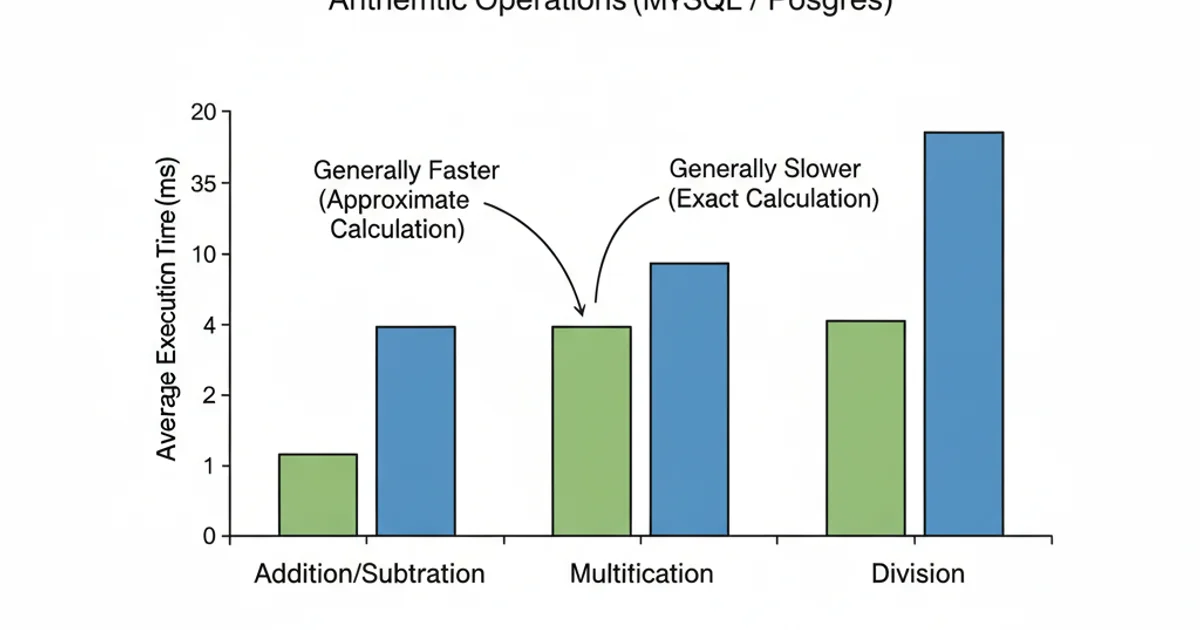

To accurately assess the performance impact for your specific use case, benchmarking is essential. Create test scenarios that mimic your application's typical workload, including data insertion, updates, and complex queries involving arithmetic operations on DECIMAL columns. Compare the execution times and resource consumption (CPU, memory) against equivalent tables using FLOAT or DOUBLE (if approximate values are acceptable for testing purposes).

Remember that the performance difference might be in milliseconds or microseconds per operation. For a system processing millions of transactions, these small differences can accumulate. However, for most web applications with typical database interactions, the performance hit from DECIMAL is often negligible compared to other bottlenecks like network latency, disk I/O, or inefficient queries.

Conceptual comparison of execution times for DECIMAL vs. FLOAT operations. Note: Actual results vary by database, hardware, and workload.

In conclusion, while DECIMAL data types do incur a performance overhead due to their software-based arbitrary precision arithmetic, this overhead is often acceptable and necessary for data integrity. Prioritize correctness and precision over marginal performance gains when dealing with critical numerical data. Only optimize away from DECIMAL if profiling clearly indicates it's a significant bottleneck and approximate values are genuinely acceptable for your use case.