consistent hashing vs. rendezvous (HRW) hashing - what are the tradeoffs?

Categories:

Consistent Hashing vs. Rendezvous Hashing: Choosing the Right Load Balancing Strategy

Explore the tradeoffs between Consistent Hashing and Rendezvous (HRW) Hashing for distributed systems, understanding their impact on load distribution, scalability, and operational complexity.

In distributed systems, efficiently distributing data or requests across a dynamic set of servers is a fundamental challenge. Load balancing algorithms play a crucial role in achieving this, aiming for even distribution, minimal data movement during scaling events, and high availability. Two prominent algorithms designed to address these challenges are Consistent Hashing and Rendezvous Hashing (also known as Highest Random Weight or HRW Hashing). While both aim to minimize key remapping when nodes are added or removed, they achieve this through different mechanisms, leading to distinct tradeoffs.

Understanding Consistent Hashing

Consistent Hashing maps both data items (keys) and cache servers (nodes) to a common hash space, typically a circular ring. Each key is placed on the ring based on its hash value, and each server is also placed on the ring based on its hash value. To find which server a key belongs to, you traverse the ring clockwise from the key's position until you encounter the first server. This server is responsible for that key. The primary advantage of this approach is that when a server is added or removed, only a small fraction of keys (those immediately adjacent to the changed server on the ring) need to be remapped, rather than a large percentage of the entire dataset.

flowchart LR

subgraph Consistent Hashing Ring

direction LR

K1(Key 1) --> S1(Server 1)

K2(Key 2) --> S2(Server 2)

K3(Key 3) --> S1

S1 --"Responsible for"--> K1

S1 --"Responsible for"--> K3

S2 --"Responsible for"--> K2

end

S1 --"Hash(S1)"--> H1[Hash Space]

S2 --"Hash(S2)"--> H2[Hash Space]

K1 --"Hash(K1)"--> HK1[Hash Space]

K2 --"Hash(K2)"--> HK2[Hash Space]

K3 --"Hash(K3)"--> HK3[Hash Space]

H1 --"Position on Ring"--> R1(Ring Position S1)

H2 --"Position on Ring"--> R2(Ring Position S2)

HK1 --"Position on Ring"--> RK1(Ring Position K1)

RK1 --"Clockwise to first server"--> R1

RK2 --"Clockwise to first server"--> R2

RK3 --"Clockwise to first server"--> R1Conceptual diagram of Consistent Hashing on a ring.

Understanding Rendezvous (HRW) Hashing

Rendezvous Hashing, also known as Highest Random Weight (HRW) Hashing, takes a different approach. Instead of mapping keys and servers to a single ring, it calculates a 'weight' for each server for a given key. For a specific key, each server computes a hash of the key combined with its own identifier (e.g., hash(key + server_id)). The server that produces the highest hash value (the 'highest random weight') for that key is chosen to handle it. When a server is added or removed, only the keys that would have been assigned to that specific server (or would now be assigned to it) are affected. This method inherently provides a more uniform distribution and doesn't require virtual nodes to achieve good balance.

flowchart TD

K(Key) --> S1_Hash["Hash(Key + Server1_ID)"]

K --> S2_Hash["Hash(Key + Server2_ID)"]

K --> S3_Hash["Hash(Key + Server3_ID)"]

S1_Hash --> W1(Weight 1)

S2_Hash --> W2(Weight 2)

S3_Hash --> W3(Weight 3)

W1 & W2 & W3 --> Max(Find Max Weight)

Max --> S_Chosen{Server with Max Weight}

S_Chosen --> Handle(Handle Key)Process flow for Rendezvous (HRW) Hashing.

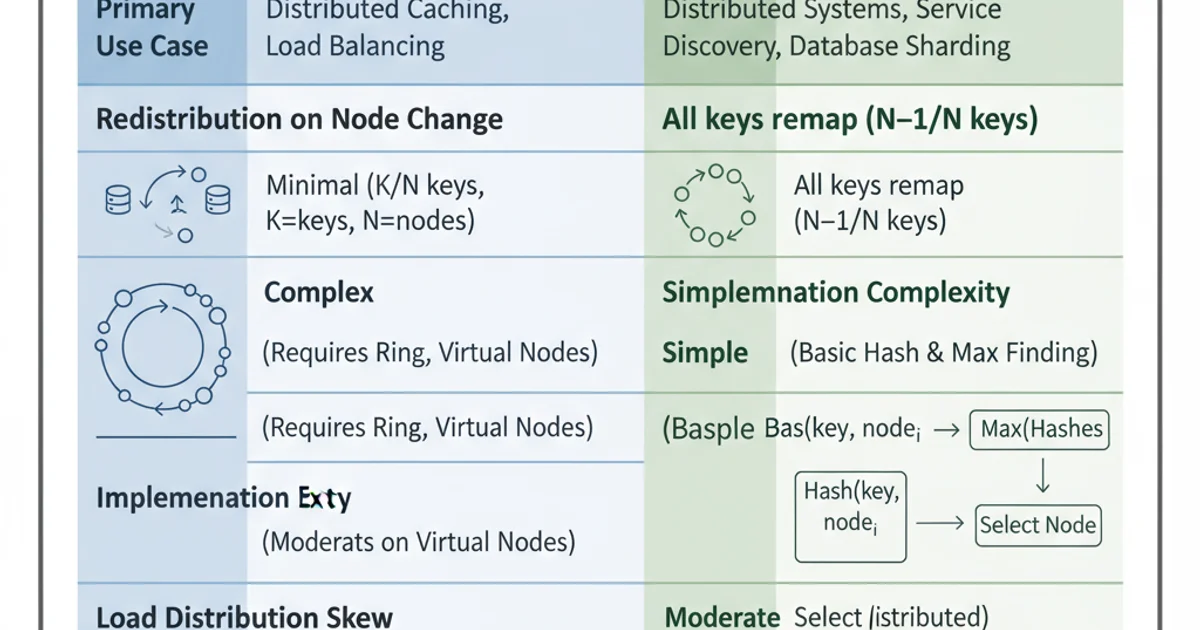

Tradeoffs and Comparison

Both algorithms are designed for distributed systems where nodes can join or leave dynamically. However, their characteristics lead to different strengths and weaknesses:

- Load Distribution: Rendezvous Hashing generally provides a more uniform distribution of keys across servers without the need for virtual nodes, especially with a small number of servers. Consistent Hashing often requires virtual nodes to achieve good balance and minimize variance.

- Complexity: Consistent Hashing, especially with virtual nodes, can be more complex to implement and manage due to the need to manage the ring and virtual node assignments. Rendezvous Hashing is often simpler to implement as it's a direct calculation for each key.

- Performance: For each key, Consistent Hashing typically involves a logarithmic search on the hash ring (if sorted) or iterating through virtual nodes. Rendezvous Hashing requires computing a hash for each server, which can be

O(N)whereNis the number of servers. For a very large number of servers, Consistent Hashing might offer better lookup performance, but for typical cluster sizes, HRW'sO(N)is often acceptable and can be optimized. - Monotonicity: Both algorithms are monotonic, meaning that when a server is added, no existing key is remapped to a different server than it was previously assigned to, except for those keys that are now assigned to the new server. When a server is removed, keys previously assigned to it are redistributed among the remaining servers. The key is that keys are only moved when absolutely necessary.

- Decentralization: Both can be implemented in a decentralized manner, where each client can independently determine which server owns a key, given the list of active servers. This avoids a single point of failure for the mapping logic.

Key differences between Consistent Hashing and Rendezvous Hashing.

When to Choose Which

The choice between Consistent Hashing and Rendezvous Hashing often depends on the specific requirements of your system:

Choose Consistent Hashing when:

- You need extremely fast key lookups, especially with a very large number of servers, and are willing to manage virtual nodes for better distribution.

- Your system frequently adds or removes nodes, and minimizing the number of remapped keys is paramount, even if it means more complex setup.

- You have a highly dynamic environment where server churn is common.

Choose Rendezvous Hashing when:

- Simplicity of implementation is a high priority.

- You need inherently better load distribution without the overhead of virtual nodes, especially for a moderate number of servers.

- The number of servers is not excessively large, making the

O(N)lookup acceptable. - You prefer a more mathematically elegant solution for distribution.

import hashlib

def consistent_hash_example(key, nodes):

# Simplified conceptual example, not a full consistent hash implementation

# In a real system, nodes would be mapped to multiple points (vnodes) on a ring

# and a binary search would find the responsible node.

key_hash = int(hashlib.sha1(key.encode()).hexdigest(), 16)

node_hashes = [(int(hashlib.sha1(node.encode()).hexdigest(), 16), node) for node in nodes]

node_hashes.sort()

for node_hash, node_name in node_hashes:

if key_hash <= node_hash:

return node_name

return node_hashes[0][1] # Wrap around the ring

def rendezvous_hash_example(key, nodes):

max_weight = -1

chosen_node = None

for node in nodes:

# Combine key and node ID, then hash

combined_string = f"{key}-{node}"

weight = int(hashlib.sha1(combined_string.encode()).hexdigest(), 16)

if weight > max_weight:

max_weight = weight

chosen_node = node

return chosen_node

# Example Usage

servers = ["server_A", "server_B", "server_C", "server_D"]

keys = ["user_1", "product_abc", "order_123", "session_xyz"]

print("\nConsistent Hashing Assignments:")

for k in keys:

print(f"Key '{k}' -> Server '{consistent_hash_example(k, servers)}'")

print("\nRendezvous Hashing Assignments:")

for k in keys:

print(f"Key '{k}' -> Server '{rendezvous_hash_example(k, servers)}'")

# Simulate adding a server

print("\n--- Adding server_E ---")

servers_new = servers + ["server_E"]

print("\nConsistent Hashing Assignments (with new server):")

for k in keys:

print(f"Key '{k}' -> Server '{consistent_hash_example(k, servers_new)}'")

print("\nRendezvous Hashing Assignments (with new server):")

for k in keys:

print(f"Key '{k}' -> Server '{rendezvous_hash_example(k, servers_new)}'")

Python examples demonstrating the core logic of Consistent Hashing (simplified) and Rendezvous Hashing.

consistent_hash_example is a highly simplified representation. A production-ready Consistent Hashing implementation would involve a more robust ring data structure, virtual nodes, and potentially a more sophisticated hashing algorithm to ensure even distribution and efficient lookups.