How to speed up shutil.copy()?

Categories:

Optimizing shutil.copy() Performance in Python

Learn how to significantly speed up file copy operations using shutil.copy() in Python by understanding its limitations and exploring alternative, more efficient methods.

Python's shutil module provides a high-level interface for file operations, with shutil.copy() being a common choice for copying files. While convenient, its performance can become a bottleneck when dealing with large files or a high volume of smaller files, especially across different file systems or network drives. This article explores why shutil.copy() might be slow and offers practical strategies and alternative methods to achieve faster file copying in your Python applications.

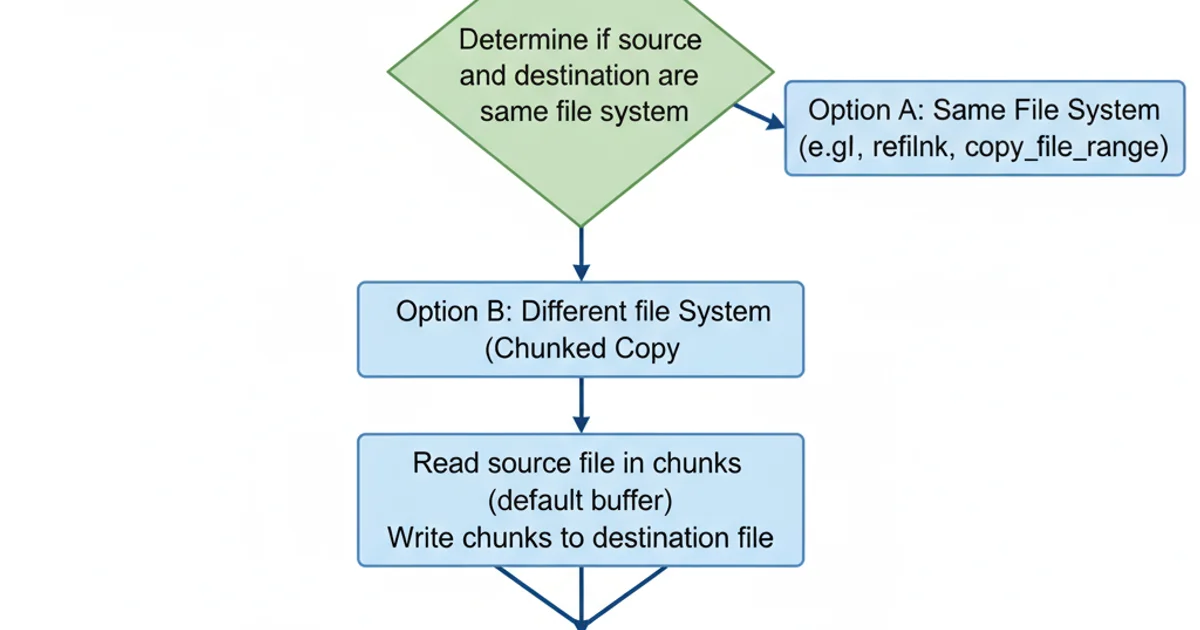

Understanding shutil.copy() Performance Characteristics

shutil.copy() is essentially a wrapper around shutil.copyfile() and shutil.copymode(). shutil.copyfile() reads the source file into memory in chunks and writes it to the destination. The default buffer size used by shutil (typically 16KB to 128KB, depending on the Python version and OS) can be inefficient for certain scenarios. Factors influencing its speed include:

- Disk I/O Speed: The underlying read/write speed of your storage devices is the primary limiting factor.

- Network Latency/Bandwidth: When copying across a network, network performance becomes critical.

- File System Overhead: Different file systems (e.g., NTFS, ext4, NFS) have varying overheads for file operations.

- CPU Overhead: While usually not the bottleneck for simple copies, Python's GIL (Global Interpreter Lock) can limit parallel I/O operations within a single process.

- Buffer Size: The size of the chunks read and written can significantly impact performance. Too small, and you incur frequent system calls; too large, and you might exhaust memory or not see further gains.

Simplified shutil.copy() Workflow

Strategies for Faster File Copying

To overcome the limitations of shutil.copy(), consider these approaches:

1. Adjusting Buffer Size for shutil.copyfile()

While shutil.copy() doesn't directly expose a buffer size parameter, shutil.copyfile() does. You can implement your own copy function that leverages shutil.copyfile() with a custom buffer size. A larger buffer can reduce the number of read/write system calls, potentially improving performance for large files, especially on fast storage or network connections. However, excessively large buffers can consume too much memory or not yield further benefits.

import shutil

import os

import time

def copy_with_custom_buffer(src, dst, buffer_size=1024*1024): # 1MB buffer

"""Copies a file from src to dst using shutil.copyfile with a custom buffer size."""

try:

shutil.copyfile(src, dst, follow_symlinks=True)

# shutil.copyfile does not directly support buffer_size in its signature

# The buffer_size parameter is internal to shutil's implementation.

# To truly customize, you'd need to implement the read/write loop yourself.

# However, for demonstration, we'll show a manual approach below.

print(f"Copied '{src}' to '{dst}' using shutil.copyfile (default buffer).")

except Exception as e:

print(f"Error copying file: {e}")

def manual_copy_with_buffer(src, dst, buffer_size=1024*1024): # 1MB buffer

"""Manually copies a file with a specified buffer size."""

try:

with open(src, 'rb') as fsrc:

with open(dst, 'wb') as fdst:

while True:

buf = fsrc.read(buffer_size)

if not buf:

break

fdst.write(buf)

# Copy metadata separately if needed

shutil.copymode(src, dst)

shutil.copystat(src, dst) # Also copies access and modification times

print(f"Manually copied '{src}' to '{dst}' with {buffer_size/1024/1024:.0f}MB buffer.")

except Exception as e:

print(f"Error during manual copy: {e}")

# Example Usage:

# Create a dummy large file for testing

# with open('large_file.bin', 'wb') as f:

# f.seek(100 * 1024 * 1024 - 1) # 100 MB file

# f.write(b'\0')

# src_file = 'large_file.bin'

# dst_file_shutil = 'large_file_shutil.bin'

# dst_file_manual = 'large_file_manual.bin'

# if os.path.exists(src_file):

# start_time = time.time()

# copy_with_custom_buffer(src_file, dst_file_shutil)

# print(f"shutil.copyfile took {time.time() - start_time:.4f} seconds")

# start_time = time.time()

# manual_copy_with_buffer(src_file, dst_file_manual, buffer_size=4*1024*1024) # 4MB buffer

# print(f"Manual copy took {time.time() - start_time:.4f} seconds")

# os.remove(src_file)

# os.remove(dst_file_shutil)

# os.remove(dst_file_manual)

Implementing a manual file copy with a custom buffer size.

2. Using OS-level Copy Utilities (e.g., cp, robocopy)

For maximum performance, especially on local file systems, leveraging the operating system's native copy utilities is often the fastest approach. These utilities (like cp on Unix-like systems or robocopy / xcopy on Windows) are highly optimized, often implemented in C/C++, and can utilize advanced OS features like direct memory access (DMA) or asynchronous I/O more efficiently than Python's standard library. You can invoke them using Python's subprocess module.

import subprocess

import platform

import os

import time

def copy_with_os_utility(src, dst):

"""Copies a file using the appropriate OS-level utility."""

system = platform.system()

command = []

if system == "Windows":

# robocopy is more robust, xcopy is simpler but less featured

# /NFL /NDL /NJH /NJS suppresses logging to stdout for cleaner output

command = ['robocopy', os.path.dirname(src), os.path.dirname(dst), os.path.basename(src), '/NFL', '/NDL', '/NJH', '/NJS']

elif system in ("Linux", "Darwin"): # macOS is Darwin

command = ['cp', '-p', src, dst] # -p preserves mode, ownership, and timestamps

else:

raise NotImplementedError(f"OS '{system}' not supported for this utility.")

try:

start_time = time.time()

result = subprocess.run(command, check=True, capture_output=True, text=True)

end_time = time.time()

print(f"Copied '{src}' to '{dst}' using {command[0]} in {end_time - start_time:.4f} seconds.")

# print("STDOUT:", result.stdout)

# if result.stderr: print("STDERR:", result.stderr)

except subprocess.CalledProcessError as e:

print(f"Error copying file with {command[0]}: {e}")

print("STDOUT:", e.stdout)

print("STDERR:", e.stderr)

except FileNotFoundError:

print(f"Error: '{command[0]}' command not found. Make sure it's in your PATH.")

# Example Usage:

# Create a dummy large file for testing

# with open('large_file_os.bin', 'wb') as f:

# f.seek(100 * 1024 * 1024 - 1) # 100 MB file

# f.write(b'\0')

# src_file = 'large_file_os.bin'

# dst_file_os = 'large_file_os_copied.bin'

# if os.path.exists(src_file):

# copy_with_os_utility(src_file, dst_file_os)

# os.remove(src_file)

# os.remove(dst_file_os)

Using subprocess to invoke OS-level copy commands.

subprocess, be mindful of security implications if the source or destination paths come from untrusted user input. Always sanitize inputs to prevent command injection vulnerabilities. Also, ensure the native utility is available on the target system.3. Asynchronous I/O (for advanced scenarios)

For highly concurrent applications or when copying many files, asyncio combined with a custom copy function can offer performance benefits by allowing other tasks to run while I/O operations are in progress. This doesn't necessarily make a single file copy faster but improves overall throughput for multiple concurrent operations. This approach is more complex and requires careful implementation.

import asyncio

import aiofiles # Third-party library for async file operations

import os

import time

async def async_copy_file(src, dst, buffer_size=1024*1024):

"""Asynchronously copies a file with a specified buffer size."""

try:

async with aiofiles.open(src, 'rb') as fsrc:

async with aiofiles.open(dst, 'wb') as fdst:

while True:

buf = await fsrc.read(buffer_size)

if not buf:

break

await fdst.write(buf)

# Note: aiofiles doesn't directly support copymode/copystat asynchronously.

# You might need to run these synchronously or use a thread pool.

shutil.copymode(src, dst)

shutil.copystat(src, dst)

print(f"Asynchronously copied '{src}' to '{dst}' with {buffer_size/1024/1024:.0f}MB buffer.")

except Exception as e:

print(f"Error during async copy: {e}")

async def main():

# Create dummy files for testing

# with open('async_file1.bin', 'wb') as f: f.seek(50 * 1024 * 1024 - 1); f.write(b'\0')

# with open('async_file2.bin', 'wb') as f: f.seek(50 * 1024 * 1024 - 1); f.write(b'\0')

# src_files = ['async_file1.bin', 'async_file2.bin']

# dst_files = ['async_file1_copied.bin', 'async_file2_copied.bin']

# if all(os.path.exists(f) for f in src_files):

# start_time = time.time()

# await asyncio.gather(

# async_copy_file(src_files[0], dst_files[0]),

# async_copy_file(src_files[1], dst_files[1])

# )

# print(f"Async copy of multiple files took {time.time() - start_time:.4f} seconds")

# for src, dst in zip(src_files, dst_files):

# os.remove(src)

# os.remove(dst)

pass

# if __name__ == '__main__':

# asyncio.run(main())

Asynchronous file copying using aiofiles and asyncio.

asyncio file operations, the aiofiles library is highly recommended as it provides async-compatible file I/O functions that integrate well with the asyncio event loop. Remember that asyncio is best for I/O-bound tasks, not CPU-bound tasks.4. Using sendfile() (Linux/Unix specific)

On Linux and Unix-like systems, the sendfile() system call can perform zero-copy data transfer between two file descriptors. This means data is moved directly from the kernel's page cache to the network socket or another file, without passing through user-space buffers. This can be extremely efficient for large files, as it avoids CPU cycles and memory copies. Python's os.sendfile() exposes this functionality.

import os

import shutil

import time

def copy_with_sendfile(src, dst):

"""Copies a file using os.sendfile (Linux/Unix specific)."""

if not hasattr(os, 'sendfile'):

print("os.sendfile is not available on this operating system.")

# Fallback to shutil.copy2 for cross-platform compatibility

shutil.copy2(src, dst)

print(f"Falling back to shutil.copy2 for '{src}' to '{dst}'.")

return

try:

# Open source file for reading, destination for writing

with open(src, 'rb') as fsrc:

with open(dst, 'wb') as fdst:

# Get file size

file_size = os.fstat(fsrc.fileno()).st_size

# Use sendfile to copy data

# The offset and count parameters can be used for partial copies

# Here, we copy the entire file

bytes_copied = os.sendfile(fdst.fileno(), fsrc.fileno(), 0, file_size)

print(f"Copied {bytes_copied} bytes from '{src}' to '{dst}' using os.sendfile.")

# sendfile does not copy metadata, so use shutil.copystat

shutil.copystat(src, dst)

print(f"Copied metadata for '{src}' to '{dst}'.")

except Exception as e:

print(f"Error copying file with os.sendfile: {e}")

# Consider falling back to shutil.copy2 on error as well

shutil.copy2(src, dst)

print(f"Falling back to shutil.copy2 for '{src}' to '{dst}' due to error.")

# Example Usage:

# Create a dummy large file for testing

# with open('sendfile_test.bin', 'wb') as f:

# f.seek(100 * 1024 * 1024 - 1) # 100 MB file

# f.write(b'\0')

# src_file = 'sendfile_test.bin'

# dst_file_sendfile = 'sendfile_test_copied.bin'

# if os.path.exists(src_file):

# start_time = time.time()

# copy_with_sendfile(src_file, dst_file_sendfile)

# print(f"os.sendfile operation took {time.time() - start_time:.4f} seconds")

# os.remove(src_file)

# os.remove(dst_file_sendfile)

Using os.sendfile() for zero-copy file transfers.

os.sendfile() is a Linux/Unix-specific function and is not available on Windows. Always include a fallback mechanism (like shutil.copy2()) for cross-platform compatibility if you plan to use this method.Conclusion

While shutil.copy() is convenient, its performance can be a limiting factor in certain scenarios. By understanding the underlying mechanisms and exploring alternatives, you can significantly improve file copying speeds in your Python applications. For most cases, adjusting the buffer size in a manual copy loop or leveraging OS-level utilities via subprocess will yield the best results. For highly specialized or concurrent applications, asyncio or os.sendfile() might be appropriate, but they come with increased complexity and platform-specific considerations. Always benchmark different approaches with your specific data and environment to determine the most effective solution.